Chapter 2 Introduction to Descriptive Statistics

Goal

The main goal of this chapter is to introduce “Descriptive Statistics” as a foundation for data analysis.

What we shall cover

By the end of this chapter you should:

- have an understanding on concept of location/relative standing (quantiles and percentiles), center (mean, median and mode), variability/spread (range, variance and standard deviation), skewness and kurtosis

- know how to graphically display descriptive summaries as an addition or alternative to displaying numerical summaries

2.1 Descriptive Statistics Overview

One of the first tasks a data analyst is tasked to do after a quick Exploratory Data Analysis is to describe variables in a given data. The main aim of this task is to understand information being passed by these variables, this is achieved by computing summaries of each variable and making visual displays. In this regard, when we say descriptive statistics, we mean numbers and graphs used to describe and summarize a given data.

There could be many descriptive statistics computed and/or graphed to describe an individual variable, but we often report the most informative descriptive statistic per variable.

So what are some of these descriptive statistics?

Consider a numerical variable like scores of students in a class room, for this particular variable, what information would be of interest to us? Won’t it be informative to know average scores, how about range between the highest and lowest score, or the percentage of students in the lower or upper bounds (we call these outliers), won’t they be informative. It could also be quite informative to visualize position of each score. Based on this, we would compute some values to give us this information, these values are what we call descriptive statistics of a variable. In our report, we would not include all of these summaries, only those we found to be informative. For example, if we did not have outliers, then we would not report it, we can simply report on the average. We would also not include graphs on individual observation if it did not show an interesting pattern (clustering or presence of outliers).

In this chapter, we will go over concepts in descriptive statistics (theoretically) and then follow up with a practical session. Our practical session will involve an actual data analysis of some data set followed up by a demonstration of how to write an analytical report.

With that in mind, for our concept building section, we shall discuss two quantiles (percentiles and quartiles ), three measures of central tendency or location (mean, median, and mode), four measures of spread or dispersion (range, inter-quartile range(IQR), variance, and standard deviation) and finally two measures of shape of a data distribution (skewness, kurtosis).

2.2 Measures of Descriptive Statistics

In this section we will begin by gaining theoretical knowledge on some of the most informative measures of descriptive statistics, these are:

- Quantiles: percentiles and quartiles

- Measures of central tendency: mean, median and mode

- Measures of spread/dispersion: range, inter-quartile, variance, and standard deviation

- Measures of distribution shape: skewness and kurtosis

In all these measures, we will discuss their numerical and graphical representation and follow-up with a demonstration on how they are computed in R.

2.2.1 Quantiles

In the most simplistic terms, quantiles are statistical measures which give values below and above a certain point. There are two commonly used measurements, these are percentiles and quartiles (this term is different from our title quantiles).

Quantiles are used to inform on data distribution, for example, we could say 90% of all callers to a customer care center were satisfied with services offered or most students scored between the second quartile (median/50%) and third quartile (75%). Saying this rather actual values or scores can be quite meaningful as we would get a general picture of where an individual value/score lies within a group of observations.

In general, we use quantiles when we want to describe an individual value in regards to other values.

2.2.1.1 Percentiles

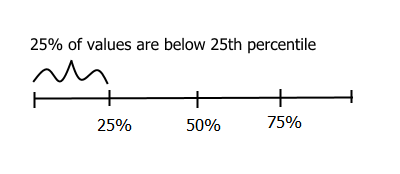

There are quite a number of definitions of percentiles, but the underlining concept behind them is that percentiles give a value below which a given percent of observations occur and the remaining percent of data occur above. To understand this, think of a number line with percentages from 1 to 99 (first value would be 1% and last value would be 99%), a score in the 25th percentile means there are 25% of the observations below it and 75% above it.

Twentyfifth percentile

With that understanding, suppose we were choosing a statistical program to use for our organization and we are told our preferred program R had a score of 286 out of a possible 300. This is good information but leaves us with a number of questions, top most being, how does 286 compare to scores for other programs. Percentiles can be handy here, but we need the entire data set to get a percentile. Therefore let’s use the following hypothetical data (distribution) to learn how to compute percentiles.

174 287 236 211 156 286 232 188 182 276 229First thing we want to do is order our data set from lowest to highest value.

156 174 182 188 211 229 232 236 276 286 287Then we want to compute proportions of each value, that is, get values between 0 and 1 of the same length with our data set. This should give us 0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9 and 1.

Our percentiles will be these proportions multiplied by 100. We can table this percentile as follows:

| Score | Rank | Percentile |

|---|---|---|

| 156 | 1 | 0 |

| 174 | 2 | 10 |

| 182 | 3 | 20 |

| 188 | 4 | 30 |

| 211 | 5 | 40 |

| 229 | 6 | 50 |

| 232 | 7 | 60 |

| 236 | 8 | 70 |

| 276 | 9 | 80 |

| 286 | 10 | 90 |

| 287 | 11 | 100 |

From this table we can easily see score of 286 at the 90th percentile. This is certainly much more informative than just saying R scored 286 out of 300.

Take note, percentile and percentage are two totally different terms. Saying someone scored 90 percent is not the same as being in the 90th percentile. As an example, there could be a number of scores like 85 to 92 in the 90th percentile but only a score of 90 percent can be 90 percent.

Computing percentiles in R

As mentioned before, we really do not need to memorize formulas or do manual computations, we just need to understand how to use them and then let statistical programs like R do the computation.

In R, to get percentile of any value in a given distribution, we first have to tell R which data we will be using, sort the data and identify index of interested value, get quantiles with function quantile and then subset output of quantiles with index of interested value. For the quantile function, we will input proportions of each value or probability of observing each value.

# Data

scores <- c(174, 286, 287, 236, 211, 156, 232, 188, 182, 276, 229)

# Index of interested score

rank <- which(sort(scores) == 286)

# Percentiles of all scores

p <- quantile(scores, probs = seq(0, 1, length.out = length(scores)))

p

## 0% 10% 20% 30% 40% 50% 60% 70% 80% 90% 100%

## 156 174 182 188 211 229 232 236 276 286 287

# Percentile for interested score

cat("\n", names(p[rank]), "\n")

##

## 90%Please read up on function ?quantile in R to understand available algorithms for computing percentiles, there are nine of them.

2.2.1.2 Quartiles

Quartiles (with an “r” not an “n”) are similar to percentiles except that in quartiles we use fractions of the data instead of percentages. That is, both percentiles and quartiles divide data, however, percentiles divide data such that a certain percent of data lie below a give percent and the rest above while quartiles divide data such that a certain fraction of data lie above and the rest below. To understand these two terms better, let’s first get fractions of a data set and then see how they differ from percentiles.

To obtain sample fractions of a given data set we begin by ordering the data set or getting ordered statistics. These ordered statistics are the quantiles and their fractions can obtained by computing their proportion (each value divided by variable length minus 1).

\[fractions = \sum[\frac{x_i}{(n-1)}]\]

Where:

\(x_i\) = value \(n\) = number of observations

For our students scores data, we can compute their fractions as shown in the table:

## Quantile Sample_fraction

## 1 156 0.0

## 2 174 0.1

## 3 182 0.2

## 4 188 0.3

## 5 211 0.4

## 6 229 0.5

## 7 232 0.6

## 8 236 0.7

## 9 276 0.8

## 10 286 0.9

## 11 287 1.0Notice our fractions are different from our percentiles:

## Quantile Quartile Percentile

## 1 156 0.0 0

## 2 174 0.1 10

## 3 182 0.2 20

## 4 188 0.3 30

## 5 211 0.4 40

## 6 229 0.5 50

## 7 232 0.6 60

## 8 236 0.7 70

## 9 276 0.8 80

## 10 286 0.9 90

## 11 287 1.0 100There are four quarters often reported for a variable, this quarters as the name suggest partition data into four equal parts. There quarters can be quite informative as it can show unique features of the data like data concentration and isolated values at extreme points (outliers). It might not be appropriate to compute quartiles if data is multi-modal (it has more than one data concentration), but let’s discuss this limitation when we are discussing mode under measures of central tendency.

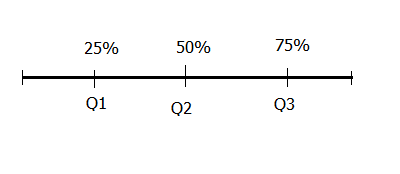

There are three cut-off points that divide a data set into four equal parts, these are Q1 (first quartile), Q2 (second quartile), and Q3 (third quartile). Q1 splits the lowest 25% of data from the highest 75% of data, this is the same as the 25th percentile. Q2 splits data into halves, this is the same as the 50th percentile or as we shall discuss later, the median of a distribution. Q3 splits top 25% of data from lower 75% of data, this is the same as the 75th percentile.

Quantiles: Percentiles and Quartiles

Going back to our scores data set, looking at the fractions for our quantiles (ordered statistics), we cannot find a value where 25% of data are below and 75% are above, we also can’t find a value where 25% of data are above and 75% are below. However we can find a value where 50% are above and 50% are below, this is score 229. We therefore can get Q2 but not Q1 and Q3.

To get these missing values we need to use a mathematical concept called linear interpolation. Linear interpolation simply means getting new data point given some values.

In our case, the first new point we want is a score that cuts off data such that 25% are above and 75% are below. Looking at our table with quantiles and their fractions, we see 0.25 (25/100) is between 0.2 and 0.3, so we know the score we seek is between 182 and 188. We now need to interpolate this score using these four pieces of information.

To interpolate this score, we need to determine type of change as well as rate of this change 2. Change between these two points is an increase as scores increased from 182 to 188 (difference of 6) and fractions increased from 0.2 to 0.3 (difference of 0.1). We can compute rate of increase by dividing change in scores by change in fractions, that is 60. Since the score we seek is between 182 and 188, then we expect rate of increase from score of 182 with fraction of 0.2 to this unknown score with a fraction of 0.25 to be a fraction of 60. This fraction is exactly the difference between 0.25 and 0.2 which is 0.05. So, rate of change from point 0.2,182 to our unknown point is 3, if we add this to 182 we get 185. We can therefore conclude that the score that cuts off values such that 25% are below and 75% are above is 185.

Using the same line of reasoning, we can establish that 256 (236 + (0.75 - 0.7) * ((276 - 236)/(0.8 - 0.7))) cuts off values such that 25% are above it and 75% are below it.

Let’s look at how to compute these values in R.

Getting quartiles in R

In R, we can still use function quantiles to get our quartiles, in these case inputting proportions for the three quantiles:

quantile(scores, seq(0.25, 0.75, 0.25))

## 25% 50% 75%

## 185 229 256We can also get this and other information using function “summary” and “fivenum”. Note function “fivenum” means Tukey’s five number summary, it’s output is unnamed vector, it can be useful for additional computation.

summary(scores)

## Min. 1st Qu. Median Mean 3rd Qu. Max.

## 156.0 185.0 229.0 223.4 256.0 287.0

fivenum(scores)

## [1] 156 185 229 256 2872.2.1.3 Graphical Display for Quantiles

There are about four graphs used to display quantiles, these are:

- Box plot

- QQplots

- Empirical Shift function plots and

- Symmetry plots

On this section we will look at the first two displays.

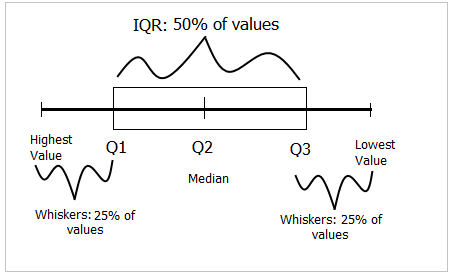

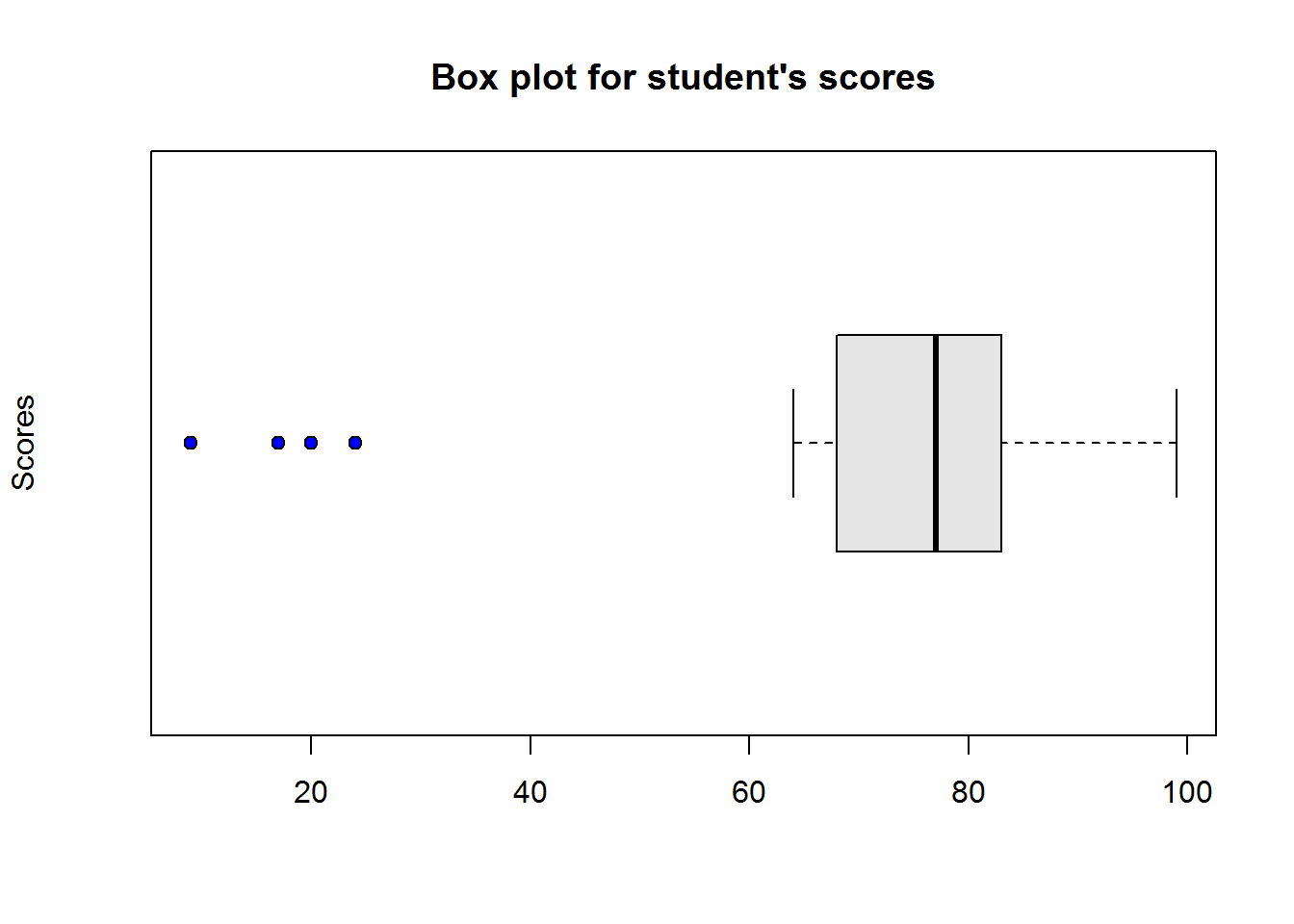

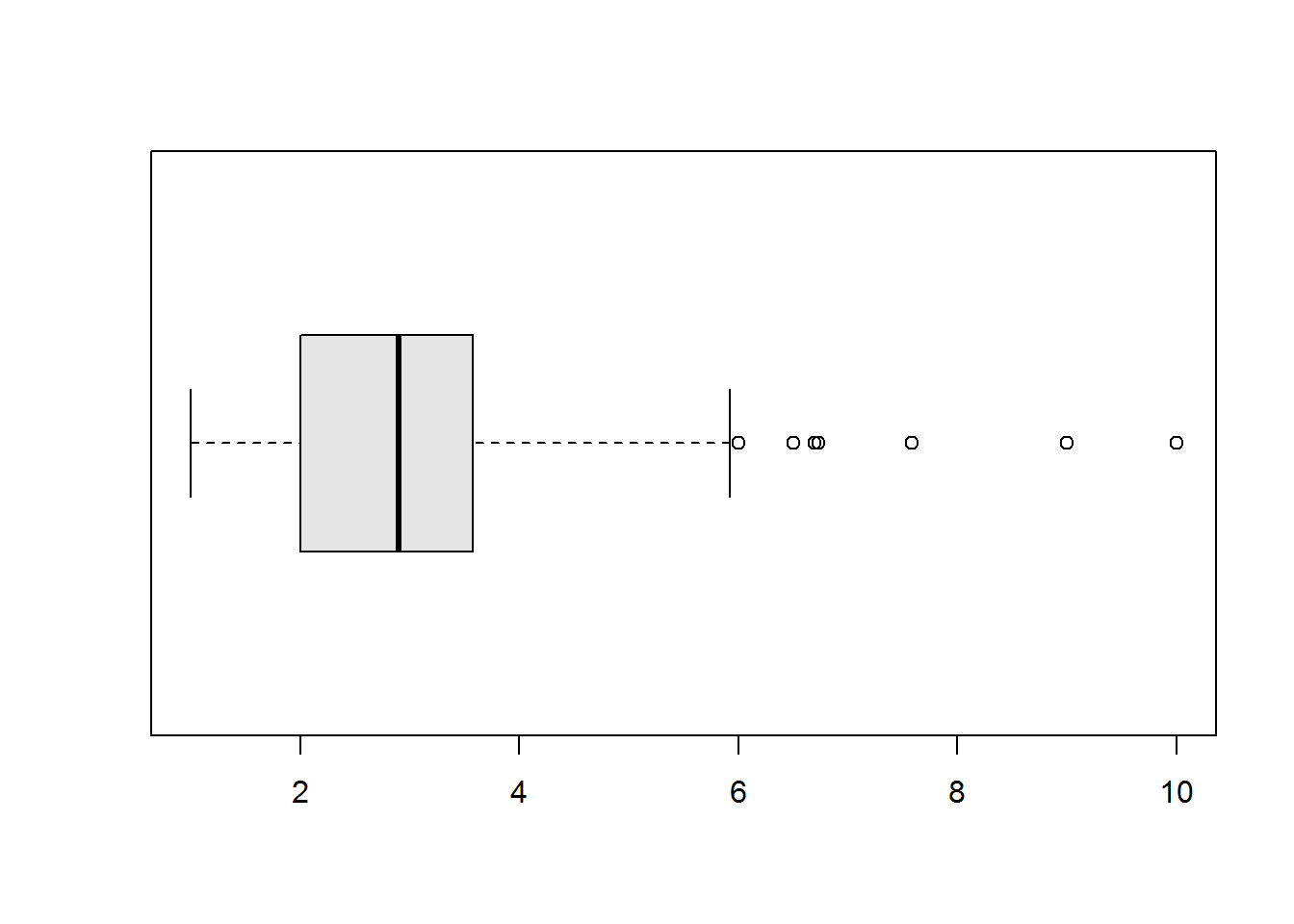

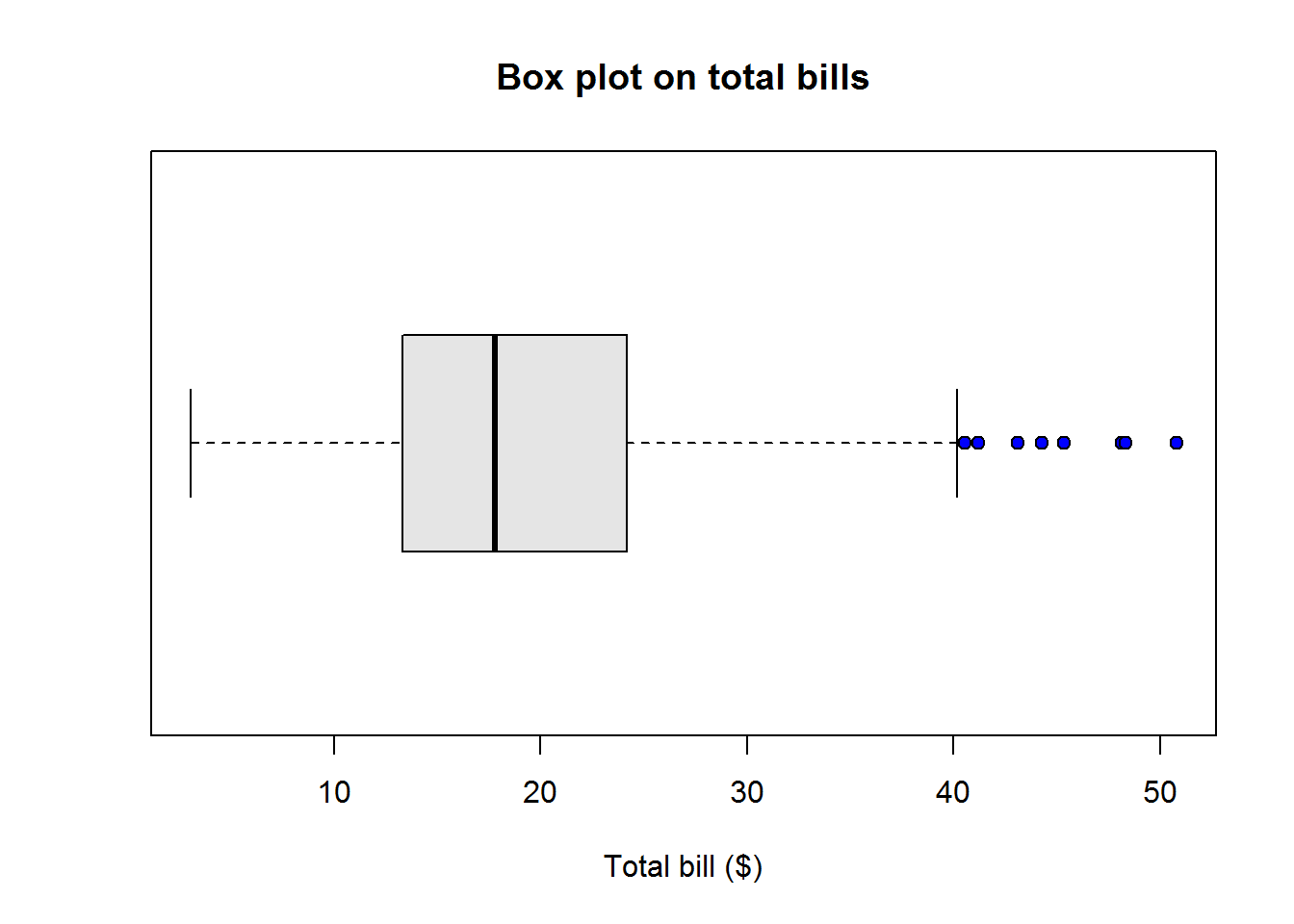

Box plots

Box plots or more appropriately box-and-whisker plots are one of the most informative graphical displays for distribution even though they have of late been superseded by fancier displays; their simplicity make them stand test of time.

Box-and-whisker plots are best used to show outliers (values occurring at extreme points) and comparing two or more distributions.

To draw a box-and-whiskers plot, draw a box from Q1 to Q3 noting Q2 with a vertical line. This box is called Inter-quartile Range (IQR) and it represents 50% of data (75% - 25%). Draw whiskers as lines extending 1.5 times IQR below Q1 and above Q3.

Box-and-whiskers plot

Value 1.5 is an arbitrary number with no specific meaning behind it, however, it but serves it’s purpose in identifying outliers.

Constructing box-and-whisker graphs by hand

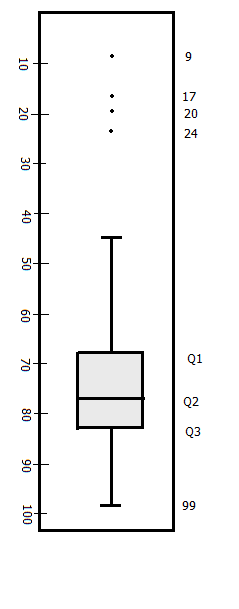

As an example, suppose we had the following hypothetical values for students scores;

## 80 77 83 64 80 81 75 83 71 86 81 76 68 84 70 24 17 9 20 99 97To draw a box-and-whiskers plot for this distribution, we first get it’s quartiles:

## 25% 50% 75%

## 68 77 83Then we compute IQR and whiskers length. We compute whiskers as 1.5 times IQR below Q1 and above Q3. Whiskers are lines extending from both ends of Q1 and Q3, they are referred to as lower and upper whiskers. IQR is computed as the difference between Q3 and Q1.

For our hypothetical data set, IQR = 15. Our whiskers are computed as:

Lower whisker

1.5 time IQR is 22.5. Subtracting 22.5 from Q1 which was 68 we get 45.5. Our lower whisker will extend from Q1 to 45.5, all values below this are outliers, these are 9, 17, 20 and 24.

Upper whisker

For the upper whisker, we will add Q3 (83) to 22.5 giving us 105.5. Since we do not have scores above 99, then we will draw our whisker from Q3 to our highest score which is 99.

Within this information, we can now draw our box plot.

Box plot for scores

Using R to plot box-and-whiskers

In R, plotting box-and-whisker is just one function call, “boxplot”.

boxplot(scores2, col = "grey90", ylab = "Scores", pch = 21, bg = 4, horizontal = TRUE)

title("Box plot for student's scores")

Figure 2.1: Box plot in R

Interpreting box-and-whisker plot

From our plot, it’s clear to see most students performed well as they clustered around average score of 77, however, there are four students who performed worse than other students.

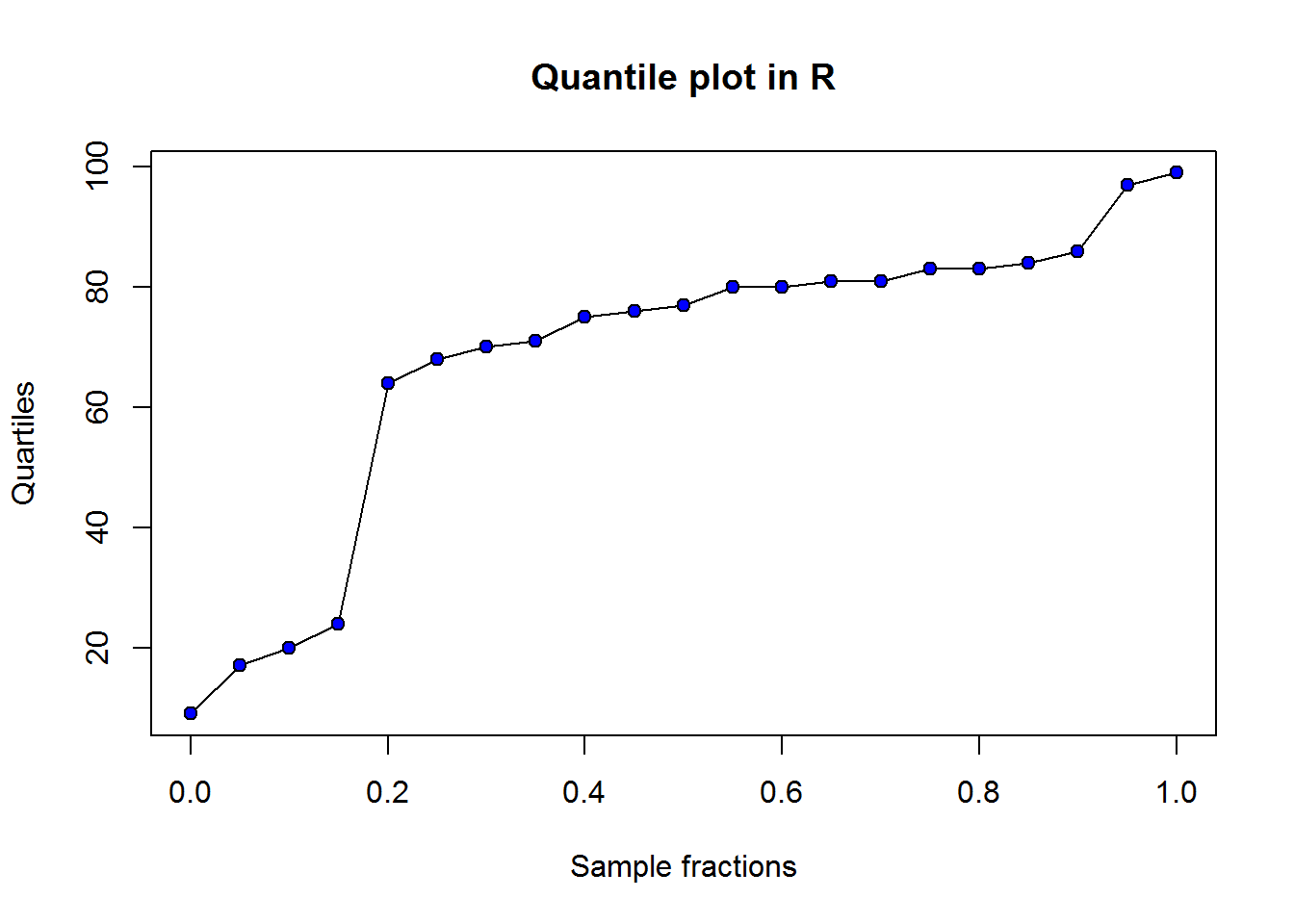

Quantile plots

These plots display sample fractions against quartiles they correspond to. To draw these we just need to compute the fractions and plot them.

Using our scores data set, we can get the following fractions:

## [1] 0.00 0.05 0.10 0.15 0.20 0.25 0.30 0.35 0.40 0.45 0.50 0.55 0.60 0.65

## [15] 0.70 0.75 0.80 0.85 0.90 0.95 1.00We will plot fractions we have just computed on the x-axis and our ordered scores/quartiles on the y-axis. We will plot a line passing though all points.

Due to interpolation, drawing this plot by hand might not be a good idea, therefore we will use R.

Quantile plots in R

There is no function to call for this plot, but since it is a line graph, standard “plot()” should do the trick.

plot(quants, sort(scores2), type = "l", ann = FALSE)

title("Quantile plot in R", xlab = "Sample fractions", ylab = "Quartiles")

# Add points to show how linear interpolants

points(quants, sort(scores2), pch = 21, bg = 4)

Figure 2.2: Q-plot in R

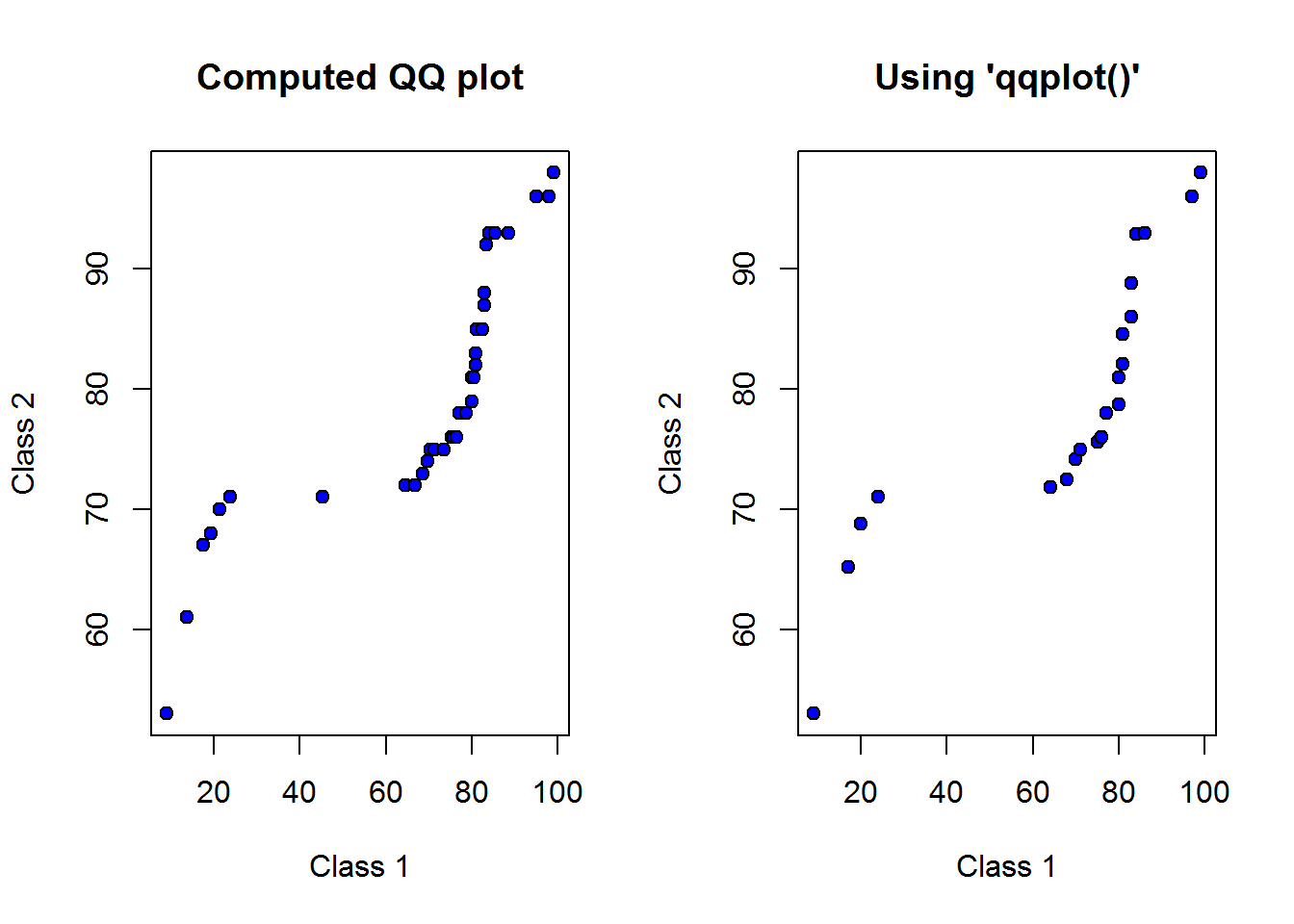

2.2.1.3.1 Quantile-Quantile (QQ) Plots

QQ plots are graphical displays for comparing two data sets, these data sets can either be two observations or one observation and one theoretical data set. Quantiles of observation one are plotted against quantiles of observation two/theoretical data set. Patterns of these points are used to

- Assess whether distributions being compared are similar

- Compare shapes of distribution

- Assess goodness of fit

As an example, let’s add a second class scores with these values.

## 93 81 75 78 53 70 78 92 76 98 67 76 82 74 61 72 71 93 73 68 93 83 85 96 81 79 76 72 87 75 71 75 96 85 88We want to compare this distribution with that of our first class. To do this we compute sample fractions of both observations. But before we do that, take note these two classes do not have the same size, class one has 21 scores and class two has 35. Since we want to plot them on the same axis, we need to standardize their axis by taking number of fractions for each sample to be equal to highest value between the two observations.

Therefore, our first task is to get highest number between the two observations which is 35 (length of second class), then we compute there fractions. This should give us 0, 0.03, 0.06, 0.09, 0.12, 0.15, 0.18, 0.21, 0.24, 0.26, 0.29, 0.32, 0.35, 0.38, 0.41, 0.44, 0.47, 0.5, 0.53, 0.56, 0.59, 0.62, 0.65, 0.68, 0.71, 0.74, 0.76, 0.79, 0.82, 0.85, 0.88, 0.91, 0.94, 0.97 and 1.

Now we can get quantiles for our classes using our computed fractions. Since we are using sample size for the second class (35), then quantiles for second class would simply be ordered statistics of it’s class scores. However, for the first class we need to interpolate their quantiles. We have seen how to interpolate these values, hence we will use R to make our work easier.

Using R

Let’s compute quantiles for scores of first and second class.

# Get number of fractions

n <- max(length(scores2), length(scores3))

# Compute quantiles

quantileClass1 <- quantile(scores2, seq(0, 1, length.out = n))

cat("Quantiles for first class:\n", quantileClass1, "\n\n")

## Quantiles for first class:

## 9 13.70588 17.52941 19.29412 21.41176 23.76471 45.17647 64.47059 66.82353 68.58824 69.76471 70.47059 71.23529 73.58824 75.23529 75.82353 76.41176 77 78.76471 80 80 80.35294 80.94118 81 81.23529 82.41176 83 83 83.47059 84.11765 85.29412 88.58824 95.05882 97.82353 99

quantileClass2 <- quantile(scores3, seq(0, 1, length.out = n))

cat("Quantiles for second class:\n", quantileClass2)

## Quantiles for second class:

## 53 61 67 68 70 71 71 72 72 73 74 75 75 75 76 76 76 78 78 79 81 81 82 83 85 85 87 88 92 93 93 93 96 96 98We can now plot these two samples using plot function. But you should know that R has a handy function which we can call with our two distributions and it will do all the calculations and then make QQ plots for us. This function is “qqplot”.

Let’s compare qqplots generated using our computations and those from qqplot function.

op <- par("mfrow")

par(mfrow = c(1, 2))

plot(quantileClass1, quantileClass2, ann = FALSE, pch = 21, bg = 4)

title("Computed QQ plot", xlab = "Class 1", ylab = "Class 2")

qqplot(scores2, scores3, ann = FALSE, pch = 21, bg = 4)

title("Using 'qqplot()'", xlab = "Class 1", ylab = "Class 2")

par(op)

## NULLThese plots look the same, now we need to interpret it.

Interpreting QQ plots

There are at least three distribution properties a QQ plot can tell us. This are, skewness, tailness and modality. It should however be noted that distributions with small sample size are not often clear as in our case (sample size of 21 and 35).

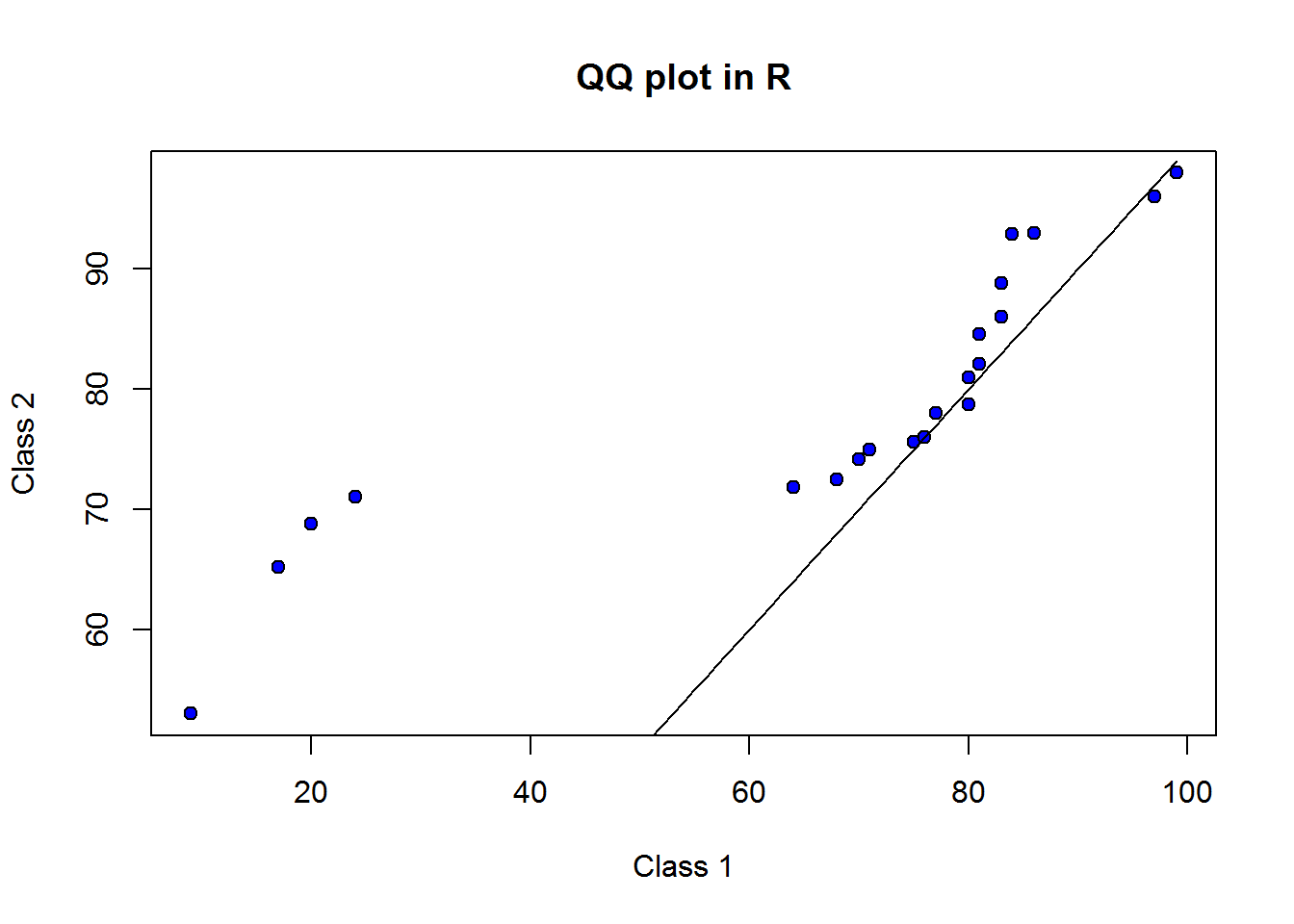

In general, if points on a QQ plot lie on the line x=y, then the two distributions are said to be similar. If the points form a line but not necessarily lie on x=y, then they are said to be linearly related and generally come from the same probability distribution. We will discuss probability distributions and their implications in chapter three. Given this information, let’s add x=y line to our plot.

qqplot(x = scores2, y = scores3, xlab = "Class 1", ylab = "Class 2", main = "QQ plot in R", pch = 21, bg = 4)

lines(x = 1:99, y = 1:99)

To draw x=y line we used function line parsing to it values forming x=y. From this line we know that scores of class one and class two do not form a linear relationship. We can thus conclude they do not have similar distributions.

Though not to clear (due to small sample size), there seems to be a bi-modal (two peaks) given the fact that we see a sort of “s” shape. These two peaks are concentration of points at point 20,70 and 80,80.

Something we can see from our graph are tailness or isolated values at extreme point, this could be an indicator of outliers (values away from expected).

It is useful to note QQ plots are not reported, they are more applicable as an Exploratory Data Analysis technique (an analysts tool so to say), that is, they are more suitable in guiding data analysis rather than being a finding to be reported.

We shall revisit QQ plots when discuss probability distribution, at that point we would have discussed some of the issues we have mentioned like skewness and modality.

2.2.2 Measures of Central Tendency

To best understand measures of central tendency or location, think of our first example on scores, we wanted to make an informed select of one statistical program among a number of programs. To this end we were told our preferred program, R, scored 286 out of 300. From our discussion on quantiles we discovered that this meant it was in the 90th percentile. That’s certainly good information, however, if you are an astute analyst, then you would want to know where the other programs are located in the distribution. More specifically, you would want to know distance of 286 from center of the distribution. Measure of central tendency is the answer to this. They summarize data to a single useful and representative information.

There are three commonly used measures of central tendency, these are “mean”, “median” and “mode”. In this section we get to look at each one of them while noting their applicability.

2.2.2.1 Mean

Mean and specifically arithmetic mean indicates center of a distribution. It’s computed as sum of all values divided by number of values. So, if you have a variable, mean is the summation of all values in that variable divided by number of elements in that variable. Mean is more appropriate for numerical variables (discrete 3 and continuous variables 4), but not qualitative or categorical data.

2.2.2.1.1 Mean for numeric data

Going back to our first example on scores on statistical programs, we compute mean as total of all values divided number of all values, that is, sum of divided by 11, giving us 223.3636364.

Mathematical notation

Based on the notion that mean is the sum of all values divided by number of values, then, given values x1, x2, x3, …, Xn, mean is:

\[\frac{x_1 + x_2 + x_3 + ... + x_n}{n}\]

This is mathematically expressed as:

\[\bar{x} = \frac{\sum {x_1, x_2, x_3, ..., x_n}}{n}\]

Where:

\(\bar{x}\) is Sample mean \(\sum\) is Greek capital letter sigma meaning “sum of” \(n\) is sample size

This mathematical expression is often reduced to:

\[\bar{x} = \frac{\sum{x}}{n}\]

In statistics, it’s important to distinguish between population parameters 5 and sample statistics 6. Mathematical expression given above is a sample statistic, if we were dealing with entire population, then population mean would be given by:

\[\mu = \frac{\sum{X}}{N}\]

Where:

\(\mu\) is population mean \(X\) are observations \(N\) is population size

Computing Mean in R

Getting mean in R is just one function call, we use function “mean”.

mean(scores2)

## [1] 67.85714Do take note, if data contains missing values or NA’s, you need to tell R by setting argument “na.rm” to TRUE, otherwise output would be NA.

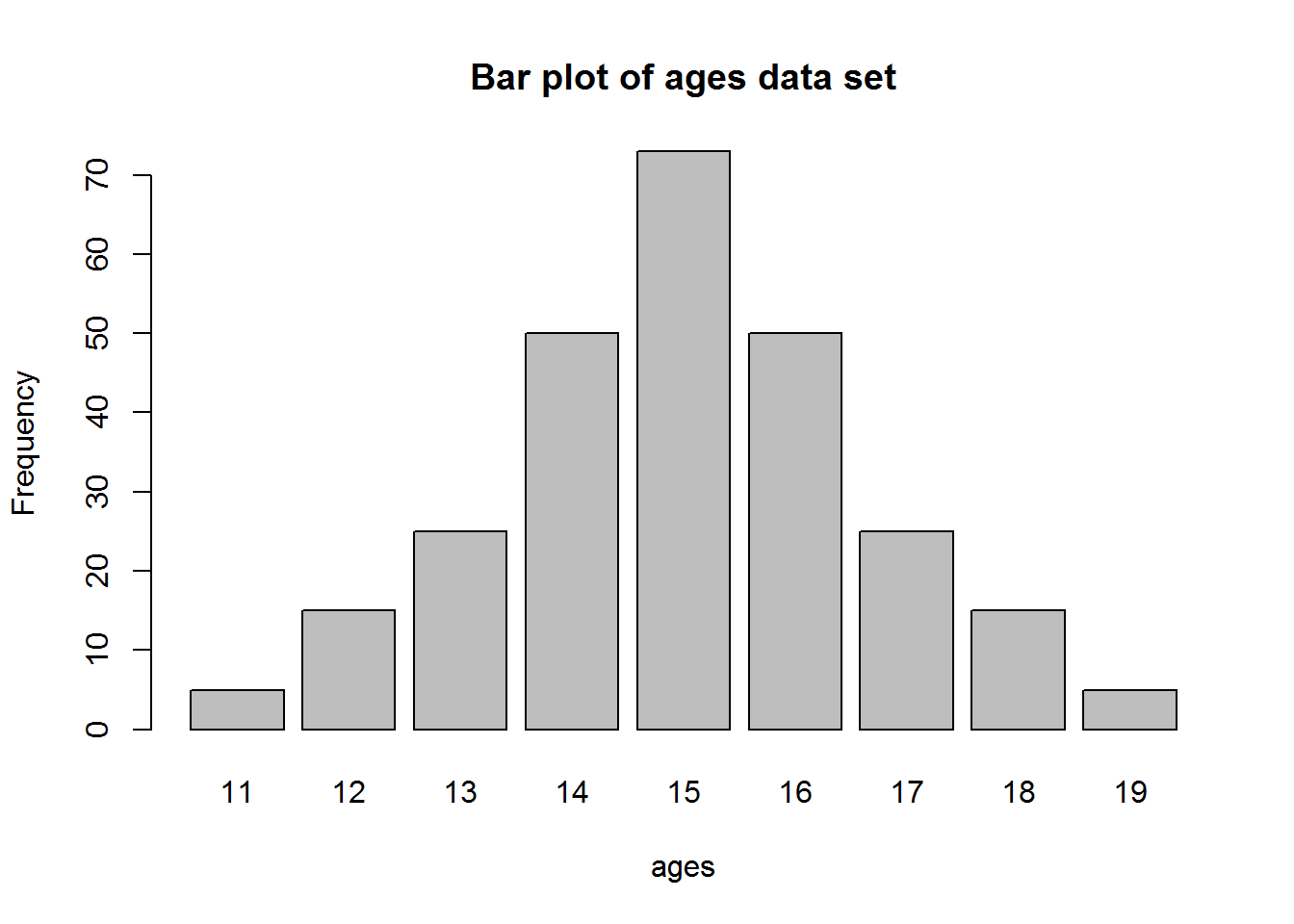

Univariate Frequency Distributions

When we have a discrete variable with few unique values or continuous variable with known ranges, then it useful to convert them grouped data.

Grouping data involves categorization or batching together observations. Grouping not only helps describe similar observations but it also helps to see underlying distribution like average, spread, skewness, modality or peakness, and extreme or isolated values.

Grouped data is often presented in frequency tables. This table can be used for grouped and ungrouped data. Ungrouped data are often unique vales of a discrete variable. Frequency tables are also called frequency distributions as they tabulate frequencies along side their corresponding observation. Frequency is the number of times an observation occurs.

With that understanding, let’s look at two examples of frequency distributions, one will be for ungrouped data and the other for grouped data.

For our first example on ungrouped data, let’s consider the following data set, it’s a list of responses to a question asked to analyst on how many times they have used R in the last week.

{0, 0, 1, 2, 3, 3, 3, 3, 3, 3, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 5, 5, 5, 5, 5, 5, 5, 5, 5}

From this data, we can see there are a number of repetitive values or few distinct values. Based on this fact, we can summarize these data by counting number of occurrence of each unique value (0, 1, 2, 3, 4 and 5) and tabulate them as follows.

| Usage | Frequency |

|---|---|

| 0 | 2 |

| 1 | 1 |

| 2 | 1 |

| 3 | 6 |

| 4 | 11 |

| 5 | 9 |

What we have just created is an ungrouped frequency table.

Now let’s look at grouped frequency distributions.

Suppose we have the following data on number of years some of the most popular programs have been in existence:

{1, 4, 6, 7, 7, 8, 9, 12, 12, 12, 13, 15, 15, 16, 17, 17, 18, 19, 19, 19, 20, 20, 21, 21, 22, 22, 23, 23, 24, 25}

There are few terms or concepts we need to appreciate as we construct frequency tables for grouped distributions. These are:

- Class: Range of values like “1-5” or “6-7”. These can also be considered as sub-set of a data distribution.

- Class size: It is the number of values in a class, for example a class of “1-5” has 5 values 1, 2, 3, 4, and 5.

- Class limits: These are the minimum and maximum values of a class, for example 1 and 5 for class “1-5”. These values can be specified as upper and lower limits.

- Class boundaries: These are also called true class limits and computed as an average of sum of lower limit of one class and upper limit of a subsequent class. As an example, if we have three classes “1-5”, “6-10” and “11-15”, we can compute class boundaries for the first two classes as (5 + 6)/2 = 5.5 and (10 + 11)/2 = 10.5. Notice, we are adding half a point to each upper boundary, therefore, we can just do the same to other classes beginning from before first class and ending right after last class; that is 0.5, 5.5, 10.5, and 15.5.

- Class width/interval: These are difference between upper and lower boundaries of any class for example 5 which is 5.5 - 0.5. It’s also the lower limits of two consecutive classes or the upper limits of two classes like 5 which results from 6 - 1.

- Class mark/midpoint: This is the middle value in a class. It’s computed as an average of upper and lower limits of a class or difference of upper and lower boundaries.

When constructing a frequency distribution table there are a few issues that need to be agreed on, these include number of classes and class width. It’s important to ensure we do not have too many or too few classes as it will obscure certain feature of our distribution or make it hard for us to interpret frequency distribution. It’s also important for us to consider class width as we do not want to end up with too many empty classes than necessary.

There are couple of formulas out there on estimating class size/width, there are also those that recommend class sizes of either 2, 5, or 10. I suggest using the latter recommendation but guided by data. For example, for our data which has values from 1 to 25, we might want to have a class width of 5 thereby having a total of 5 classes. Taking classes of width 2 might make our frequency distribution too big as we would have about 12 classes and some leftover. Having class width of 10 on the other hand would mean having only two or three classes, this might be a bit small. So our 5 classes seams ideal.

Now that we know how many classes we will have and their width, we can construct our classes bearing in mind that they need to be unique (a value can only have one possible class it belongs to). Based on this we can have the following classes and number of observations that fall in those classes.

| Years | Frequency |

|---|---|

| 1 - 5 | 2 |

| 6 - 10 | 5 |

| 11- 15 | 6 |

| 16- 20 | 9 |

| 21- 25 | 8 |

What we have above is a grouped frequency distribution table.

With this brief introduction to frequency distributions, let’s now see how to compute their descriptive statistics.

2.2.2.1.2 Mean for frequency distributions

In this section we will look at how to compute averages for ungrouped and grouped distributions.

2.2.2.1.3 Mean for ungrouped distributions

To learn how to compute mean for ungrouped distributions, let’s build up from our understanding of mean for non-frequency distribution. For these non-frequency distributions, we defined mean as sum of all values divided by number of values. Now, for ungrouped mean, we need to begin by reconstructing number of values by multiplying observations by their frequencies, and then sum them up before dividing by number of values or total frequencies.

As an example, let’s revisit our data on responses from analyst.

| Usage | Frequency |

|---|---|

| 0 | 2 |

| 1 | 1 |

| 2 | 1 |

| 3 | 6 |

| 4 | 11 |

| 5 | 9 |

We compute mean for this data by multiply each usage (observation/value) with it’s frequency, then sum them up and finally divide by number of responses (number of analysts); that is,

\[\frac{(0*2) + (1*1) + (2*1) + (3*6) + (4*11) + (5*9)}{2+1+1+6+11+9}\]

We should get mean as 3.6666667. Based on this finding we can conclude that, on average analysts in our organization used R about 3.7 times last week.

We can mathematically express this computation as:

\[\bar{x} = \frac{{\sum\limits^{n}_{i=1}}{f_ix_i}}{\sum\limits^{n}_{i=1}{f_i}}\]

Where:

n = number of unique observations or number of rows in frequency table f = frequency x = an observation in a frequency table

or simply as:

\[\bar{x} = \frac{\sum{fx}}{\sum{f}}\]

Computing mean for ungrouped distribution in R

In R, we can compute mean for distribution with “mean” function. To generate a frequency table we use function “table”. table() does not produce a very presentable table, so we will transform it into a data frame with “as.data.frame” function thereby giving us a table similar to what we manually constructed.

## [1] 3.666667

## ungpd1

## 0 1 2 3 4 5

## 2 1 1 6 11 9

## Usage Freq

## 1 0 2

## 2 1 1

## 3 2 1

## 4 3 6

## 5 4 11

## 6 5 92.2.2.1.3.1 Mean for grouped distributions

Let’s use our second example on frequency distribution to compute mean for a grouped distribution. This was our distribution:

| Years | Frequency |

|---|---|

| 1 - 5 | 2 |

| 6 - 10 | 5 |

| 11- 15 | 6 |

| 16- 20 | 9 |

| 21- 25 | 8 |

We have just discussed mean for ungrouped mean as summation of products of observations and frequencies divided by total frequency. We are going to use this definition with a slight amendment and that’s what we consider to be our observations.

When we were dealing with ungrouped data it was easy for us to recreate our original values by multiplying observations by their frequencies, however, for grouped distributions we can’t do this. This is because we can simply not know exact value of any frequency within a class (range of values). So what we can do is go for the next best thing which is an estimate. This estimate is a class midpoint or a class mark. Once we have these mid points, then we can compute mean just as we did with ungrouped data.

To show each computation, let’s use our frequency table and add column on midpoint and product of midpoints and frequencies.

| Years | Midpoints | Frequencies | Product |

|---|---|---|---|

| 1 - 5 | 3 | 2 | 6 |

| 6 - 10 | 8 | 5 | 40 |

| 11 - 15 | 13 | 6 | 78 |

| 16 - 20 | 18 | 9 | 162 |

| 21 - 25 | 23 | 8 | 184 |

| Total | 30 | 470 |

Mean for this distribution is thus 470 divided by 30 which is 15.6666667. We can therefore conclude that average number of years statistical packages have been in existence is about 15.7 years. We can use this average with number of years R has been in existence which is 24 (from 1993 to 2017); looks like R has some mileage over most programs (hypothetically speaking).

There two things we need to appreciate as we conclude this section on mean for grouped data, these are;

- Mean for grouped data is an estimate: Unlike mean for ungrouped distributions, mean for grouped data is an approximation as it uses midpoints rather than actual values/observations. It is therefore important to collect responses with ungrouped values as it is easier to group observations during data analysis than it is to reconstruct actual values from classes.

- Don’t use mean for frequency distributions with open groups: Open groups like “15+” or “65 and above”, should use mode as a measure of central tendency. This is because it is not possible to compute midpoint for an infinite class.

Computing grouped mean in R

Unfortunately there is no one function for calculating grouped mean in R so we have to go through a number of steps to compute this mean.

# Data

years <- factor(c("0-5", "6-10", "11-15", "16-20", "21-25"), ordered = TRUE)

freq <- c(2L, 5L, 6L, 9L, 8L)

gpd1 <- data.frame(Years = years, Freq = freq)

gpd1

## Years Freq

## 1 0-5 2

## 2 6-10 5

## 3 11-15 6

## 4 16-20 9

## 5 21-25 8

# Number of observations

n <- sum(gpd1$Freq)

# Midpoint

midpoint <- c((5+0)/2, (10+6)/2, (15+11)/2, (20+16)/2, (25+21)/2)

gpd1[3] <- midpoint

names(gpd1)[3] <- "Midpoint"

gpd1

## Years Freq Midpoint

## 1 0-5 2 2.5

## 2 6-10 5 8.0

## 3 11-15 6 13.0

## 4 16-20 9 18.0

## 5 21-25 8 23.0

# Product of midpoints and frequency

gpd1[4] <- gpd1$Freq * gpd1$Midpoint

names(gpd1)[4] <- "Product"

gpd1

## Years Freq Midpoint Product

## 1 0-5 2 2.5 5

## 2 6-10 5 8.0 40

## 3 11-15 6 13.0 78

## 4 16-20 9 18.0 162

## 5 21-25 8 23.0 184

# Mean

sum(gpd1$Product)/n

## [1] 15.633332.2.2.2 Median

Median is basically the middle observation in an ordered distribution. To get this middle value, we have to determine if distribution has an even or an odd number of observations.

2.2.2.2.1 Median for odd numbered distributions

For odd numbered observations, middle value is rather easy to locate, it is that value which splits a distribution such that there are equal number of values before it and after it. For example, a distribution with 21 observation would have the eleventh observation as it’s median since there ten values before it and another ten after it. Basically , median for an odd numbered distribution is number of observations divided by two and then raised to the nearest whole like 21/2 = 10.5, 10.5 to nearest whole is 11.

Using this reasoning we can generate our own formula for computing median for odd numbered distribution:

\[Median_{(odd)} = data[round(\frac{n}{2})]\]

Where:

data = distribution [] = subset notation round = raise number to next whole number (digits = 0) n = number of observation in distribution

Now let’s get median for our data on scores for statistical programming languages.

First we order our data from the lowest value to the highest value:

## 156 174 182 188 211 229 232 236 276 286 287Since number of elements in this data set is odd (11), we can use our formula that is, median is round(11/2) which is,229.

Computing median in R

In R, median for numerical distribution is one function call whether it’s an odd numbered distribution or even.

median(scores)

## [1] 2292.2.2.2.2 Median for even numbered distributions

For even numbered distributions, median is an average of the two middle values. For example, a distribution with 20 observations would have it’s median as an average of the tenth and eleventh observation.

To get these two middle values, we get half the number of distribution like 20/2 and half the number of distribution plus one like (20/2 + 1).

As before, we can generate our own formula for computing median for even numbered distribution as:

\[Median_{(even)} = \frac{{data[\frac{n}{2}]+data[\frac{n}{2}+1]}}{2}\]

Where:

data = distribution [] = subset n = number of observation in the distribution

Now, using our scores data set, let’s add a score of 234 to make an even numbered distribution. This is how it looks when ordered:

## 156 174 182 188 211 229 232 234 236 276 286 287Our data now has twelve values, using our derived formula, we can compute median as

\[Median_{(even)} = \frac{scores[\frac{12}{2}]+scores[\frac{12}{2}+1]}{2}\]

This should output 230.5.

Another way to look at median for even numbered distribution is number of distribution (n) plus one divided by two.

\[Median_{(even)} = \frac{n + 1}{2}\]

Above formula is certainly simpler but not as intuitive as our formula.

2.2.2.2.3 Median for frequency distributions

Like median for non-frequency distribution, computation for median for frequency distributions depends on whether total frequency is odd or even.

Since we now know difference between median for odd and even numbered distribution, in this section we will focus on getting to locate median of frequency distribution by using our two data sets on responses from analysts and years statistical programs have been in existence.

By and large, median for ungrouped and grouped distributions go through the same processes. We first determine if we are dealing with an odd or an even numbered distribution by getting sum of all frequencies, then using appropriate formula, compute location of median, and finally identify observation or class containing the median by cummulating frequencies.

Let’s see how this actually works.

2.2.2.2.3.1 Ungrouped distributions

Using our data analyst response data, let’s determine its median.

We begin by finding out if it’s an odd or even numbered distribution by summing frequencies (2, 1, 1, 6, 11, and 9). This should give us 30, an even number.

Since it’s an even number we will use our second formula to locate position of our median. Median is the observation at position 15.5 ((30+1)/2).

To identify observation at this position we need to generate cumulative frequencies and the best way to do this is to add a column to our frequency distribution table.

| Usage | Frequency | Cumulative frequency |

|---|---|---|

| 0 | 2 | 2 |

| 1 | 1 | 3 |

| 2 | 1 | 4 |

| 3 | 6 | 10 |

| 4 | 11 | 21 |

| 5 | 9 | 30 |

From our cumulative frequencies, we can see 15.5 is in the fourth observation, hence median is 4.

Locating median for ungrouped distributions in R

Median for ungrouped distributions is computed the same way as non-frequency distributions, using function “median”.

ungpd1

## [1] 0 0 1 2 3 3 3 3 3 3 4 4 4 4 4 4 4 4 4 4 4 5 5 5 5 5 5 5 5 5

median(ungpd1)

## [1] 42.2.2.2.3.2 Grouped distributions

Median for grouped distribution is exactly the same as that of ungrouped distributions. That is, we begin by identifying whether we have an odd or an even number of distribution, compute location of our median and finally identify class with that position.

Our total frequency is 30, same as before so we know we are looking for a class with the fifteen point five observation.

We generate cumulative frequencies.

| Years | Frequencies | Cumulative frequency |

|---|---|---|

| 1 - 5 | 2 | 2 |

| 6 - 10 | 5 | 7 |

| 11 - 15 | 6 | 13 |

| 16 - 20 | 9 | 22 |

| 21 - 25 | 8 | 30 |

From these (cumulative frequencies) we find 15.5 is in the fourth class, hence median class is “16-20”.

Locating median for grouped distribution in R

Base R does not have a function to compute median for grouped data, but it is not hard to compute it. For odd number of distribution, we can get median of our distribution’s indices, round it up and subset this value from our data. For even number, we can simply get median of our data.

# Median for odd numbered grouped distribution

dat <- rep(as.character(years), freq) # Generate data

dat[round(median(seq_along(dat)))]

## [1] "16-20"

# Median for even numbered grouped distribution

dat[length(dat)+1] <- "0-5" # Add a value to make distribution even

median(dat)

## [1] "16-20"2.2.2.3 Mode

Mode is the most frequently occurring value or category. This is the only measure of central tendency suitable for categorical or qualitative data. This is also the only measure of central tendency which could have more than one value or none at all.

A distribution can have no mode in which case there are repeating/uniform observations, or have one mode thus called “unimodal”, or two modes thus called “bimodal” or more than two modes thus called “multimodal”.

2.2.2.3.1 Mode: numerical distributions

For discrete distributions, to get the most frequently occurring value we need to generate frequencies and then determine which observation has the highest frequency. For continuous distributions, we need to group/categorize observations, we will discuss these distributions in our section on grouped distributions.

As an example of discrete distributions, let’s look at situations where we do not have a mode, have one mode (unimodal), two modes (bimodal) and where we have more than two modes (multimodal).

Uniform Distribution

Uniform distributions have no mode which means all observations are equal. Here is an example of a data set with no mode.

## 65 65 65 65 65 66 66 66 66 66 67 67 67 67 67 68 68 68 68 68 69 69 69 69 69 70 70 70 70 70We can establish lack of mode using a frequency table.

| Value | Frequency |

|---|---|

| 65 | 5 |

| 66 | 5 |

| 67 | 5 |

| 68 | 5 |

| 69 | 5 |

| 70 | 5 |

All observations have the same frequency.

Unimodal distributions

Unimodal distributions have one peek or one most frequently occurring observation. For example, the following distribution:

## 64 65 67 66 64 66 65 65 64 68 66 65 67 64 65 66 64 65 65 64 65 66 65 65 64 64 67 65 64 65 64 66 66 65 66 64 64 63 66 66 67 63 65 66 65 65 66 66 65 65 66 66 66 65 64 64 68 66 64 65 65 66 65 67 65 65 65 66 66 65 66 64 65 65 64 66 63 66 65 64 66 66 64 66 65 65 66 66 64 63 66 67 64 64 64 68 65 64 66 65We can create the following frequency distribution

| Values | Frequency |

|---|---|

| 63 | 4 |

| 64 | 24 |

| 65 | 33 |

| 66 | 30 |

| 67 | 6 |

| 68 | 3 |

From this table, it is clear to see the most frequently occurring value is 65 as it has the highest number of observations (frequency of 33).

Bimodal distributions

Bimodal distributions have exactly two modes. That is, they have two most frequently occurring value. We can see this from the following distribution

## 39 39 40 40 40 40 40 40 41 41 41 68 69 69 69 70 70 70 70 70 70 71 71This distribution has the following frequency distribution:

| Value | Frequency |

|---|---|

| 39 | 2 |

| 40 | 6 |

| 41 | 3 |

| 68 | 1 |

| 69 | 3 |

| 70 | 6 |

| 71 | 2 |

The two modes in this distribution are 40 and 70 as each has six observations.

Multimodal distributions

Multimodal distributions have more than two modes, here is an example of a distribution with three modes.

multimodal <- c(bimodal, rep(72, 6))

cat(multimodal)

## 39 39 40 40 40 40 40 40 41 41 41 68 69 69 69 70 70 70 70 70 70 71 71 72 72 72 72 72 72From the following frequency distribution, we can see there are three modes, 40, 70 and 72.

| Value | Frequency |

|---|---|

| 39 | 2 |

| 40 | 6 |

| 41 | 3 |

| 68 | 1 |

| 69 | 3 |

| 70 | 6 |

| 71 | 2 |

| 72 | 6 |

Getting mode of a distribution in R

R does not have a function to get statistical mode, the “mode” function in R is used to do something else (get internal storage type). However, getting this value is rather easy with knowledge of what mode is.

To get mode we table (make a frequency table) our values, find maximum frequency using function “which.max” and return value using “name” function.

# Data

set.seed(583)

mode1 <- round(rnorm(100, 65))

# Frequency table

table(mode1)

## mode1

## 63 64 65 66 67 68

## 4 24 33 30 6 3

# Mode

names(which.max(table(mode1)))

## [1] "65"2.2.2.3.2 Mode: Frequecy distribitions

In this section we discuss how to get mode for grouped and ungrouped distribution.

2.2.2.3.2.1 Mode: ungrouped distributions

Getting mode for this distribution is the same as getting mode for non-frequency distribution, actually even easier since we have frequencies, we only need to determine which is the highest frequency.

Using our data analyst responses, we can get mode as 4 since it had the highest frequency (11).

In R we only need to use function “which.max” to get mode.

# Frequency table (as a dataframe)

ungpd1Tab

## Usage Freq

## 1 0 2

## 2 1 1

## 3 2 1

## 4 3 6

## 5 4 11

## 6 5 9

# Mode

ungpd1Tab[which.max(ungpd1Tab$Freq), 1]

## [1] 4

## Levels: 0 1 2 3 4 52.2.2.3.2.2 Mode: Grouped distributions

Mode for grouped data is applicable for both categorical distributions as well as continuous distributions. For continuous distributions, categories/groups need to be constructed first.

From these groups we can get modal class the same way we got mode for ungrouped frequency, that is, identify class with highest frequency.

For our data on number of years statistical programs have been in existence, we can easily locate mode as the fourth class “16-20” which has 9 observations.

Locating mode for grouped distribution in R

Using R, we again use function “which.max” and subset class (years).

gpd1$Years[which.max(gpd1$Freq)]

## [1] 16-20

## Levels: 0-5 < 11-15 < 16-20 < 21-25 < 6-10Here is a function for determining modal class given a continuous distribution and breaks. Breaks are cutoff points to which a distribution will be grouped.

mode_grouped <- function(x, breaks, class = TRUE) {

n <- length(breaks)

nms <- sapply(1:(n-1), function(i) paste(breaks[i], "-", breaks[i+1]))

freq <- lapply(1:(n-1), function(i) which(x >= breaks[i] & x < breaks[i+1]))

names(freq) <- nms

if (class) {

names(which.max(sapply(freq, length)))

} else {

which.max(sapply(freq, length))

}

}2.2.2.4 Comparison of measures of central tendecy

We have just concluded a good discussion on measures of central tendency. From it we can compute mean, median and mode of any numeric and frequency distribution. This is certainly great, but do we need to report on all of them? Certainly not, each measure has it’s own merits and demerits as well as it’s applicability. Let’s discuss these aspects.

Central tendency for qualitative distributions

When dealing with qualitative or grouped data, mode is the most appropriate measure of central tendency. Reason, think of a variable such as educational level with high, medium and low, would it make sense to say average level of education is 10.6, what would ten mean and more specifically, what would a point six indicate? Won’t it be more informative to hear “most respondents have high education”.

Mean and median

When data has some extreme values, median is more appropriate than mean. Basic reason for this is that mean uses all values in a distribution while median uses positions of these values. Think of it this way, you can’t compute mean without knowing values in a distribution but you can say where median value is located by just knowing how many values a distribution has.

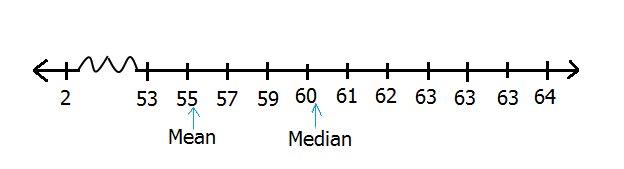

For example, in the following distribution, we have eleven values from 53 to 64. This distribution does not have extreme values.

## 59 59 60 60 61 61 61 61 62 62 62Mean for this distribution is 60.7272727 and median is 61, a difference of 0.2727273. Difference between mean and median is small and any can be used to report centrality of distribution but most analysts in this case would report on mean.

Now let’s add just one extreme value (2) and assess it’s impact on mean and median.

## 2 59 59 60 60 61 61 61 61 62 62 62Now our distribution begins from 2 not 53. When we compute mean we get 55.8333333 and when we compute median we get 61, a difference of 5.1666667. For this distribution, which average would be more appropriate, mean of about 56 or median of about 61?

Comparison of mean and median with extreme value

As shown in the figure above, mean would certainly not be an accurate measure of centrality for this distribution, it is influenced by an extreme value (2). Therefore, when reporting averages for distributions with extreme values, it is meaningful to report median as opposed to mean.

2.2.2.5 Summary - Mean, Median, Mode

Mean is also called average, it is computed as sum of all values divided by number of values. Median is center of a distribution; the value at the middle of a distribution when it is arranged in order. Mode is the most frequently occurring value in a distribution.

Mean, median and mode provide us with a descriptive value for our distribution what we would call a representative value. All three measures can be used to describe numerical distributions (discrete and continuous), but mean and median are more appropriate. When a numerical distribution has extreme values, it is best to use median as a representative value.

For any distribution, there can only be one mean and one median, but there could zero, one (uni-modal), two (bimodal) or more modes (multimodal). When writing a data analysis report we only report the most appropriate statistic.

Sometimes it can be quite informative to present data in frequency tables. These are tables summarizing observations with how many time they occur (frequency). Frequency distributions can either be ungrouped or grouped. Grouped means observations have been categorized or bundled together in form of classes. These classes comprise range of values, how these classes are constructed is important and some considerations must be made. It’s important as analysts to always try to collect data as ungrouped distribution as it is easier to construct groups pre-analysis rather than reconstruct values during analysis. Grouped statistics are estimates and with all estimates, they are not as accurate.

2.2.3 Measures of dispersion (variablity/spread)

Having established a representative value for our data, the next thing we may wish to know is how spread out our data points are and more specifically how far away they are from our representative value.

Why you might ask, well, think of our first data set with scores on statistical program’s performance. We found out our preferred program R with a score of 286 is in the 90th percentile, that meant it had performed better than 90% of the programs. We also have a reported average of about 223 which tells us R is way ahead of average score. This is certainly informative, but as analyst we are abound to want more information. For example we might want to know where the other scores are located, are they clustered around our average score or are they dispersed. Another question we might have is, since 286 is way above mean of 223, could it be an extreme value and what was the minimum and maximum score. Now this is where measure of dispersion comes in.

Other than answering these questions, measures of dispersion can be used to compare distributions with similar measures of central tendency. For example, two distributions can have the same mean but one distribution can have it’s values more spread out from the mean than the other distribution.

When we say dispersion, we mean how spread out our values are in the distribution. Some of the statistics used to measure dispersion are range, inter-quartile range and standard deviation.

In this section we discuss how to compute these measures of dispersion.

2.2.3.1 Range

Range is the simplest measure of dispersion, it is described as the difference between minimum and maximum value. This difference is often reported along side these minimum and maximum values. In general, range tells us how spread out our values are.

For example, to compute range for our data on scores for statistical programs, we begin by arranging values from lowest to the highest value. From this ordered distribution we subset first and last value which become our minimum and maximum values. Range is thus their difference. We can therefore establish maximum score as 287 and minimum score as 156 there difference is 131. When reporting this range, we can do it this way 131 (156 - 287).

Computing range in R

In R, we get range using function “range”, it’s output is a vector with minimum and maximum values. To get difference between the two, use function “diff”.

# Minumum and maximum values

range(scores)

## [1] 156 287

# Range

diff(range(scores))

## [1] 131Range of 131 (156 - 287) out of a possible range of 300 (0 - 300) (possibility of zero to maximum score) tells us scores are not quite spread out. In comparison to R’s score of 286, we can now say R is almost the highest rated program.

In general, range is useful when we want to look at an entire distribution. It is especially informative when comparing dispersion of two or more distribution. For example, we could look at range of two or more class scores to see how well each class did.

Range however is influenced by extreme values, extreme values increase range of a distribution. Take for instance a distribution with values 2, 55 and 65, range for this distribution is 63 (2, 65), this dispersion is wider because of value 2, if we excluded it we would get a range of 10 (55, 65). Because of this shortfall, inter-quartile range are often reported.

2.2.3.2 Inter-quartile Range

Inter-quartile range (IQR) is a range of where 50% of a distribution lie. For distributions with outliers, it is best to report it’s IQR than it’s range.

IQR is simply third quartile minus first quartile (Q3 - Q1). Q1 is the 25th percentile or location below which 25% of values lie and Q3 is the 75th percentile or location above which 25% of data lie.

We have discussed how to get quartiles as well as how to show interquartile range when constructing box plots. Here let’s discuss how IQR can inform us on spread of a distribution.

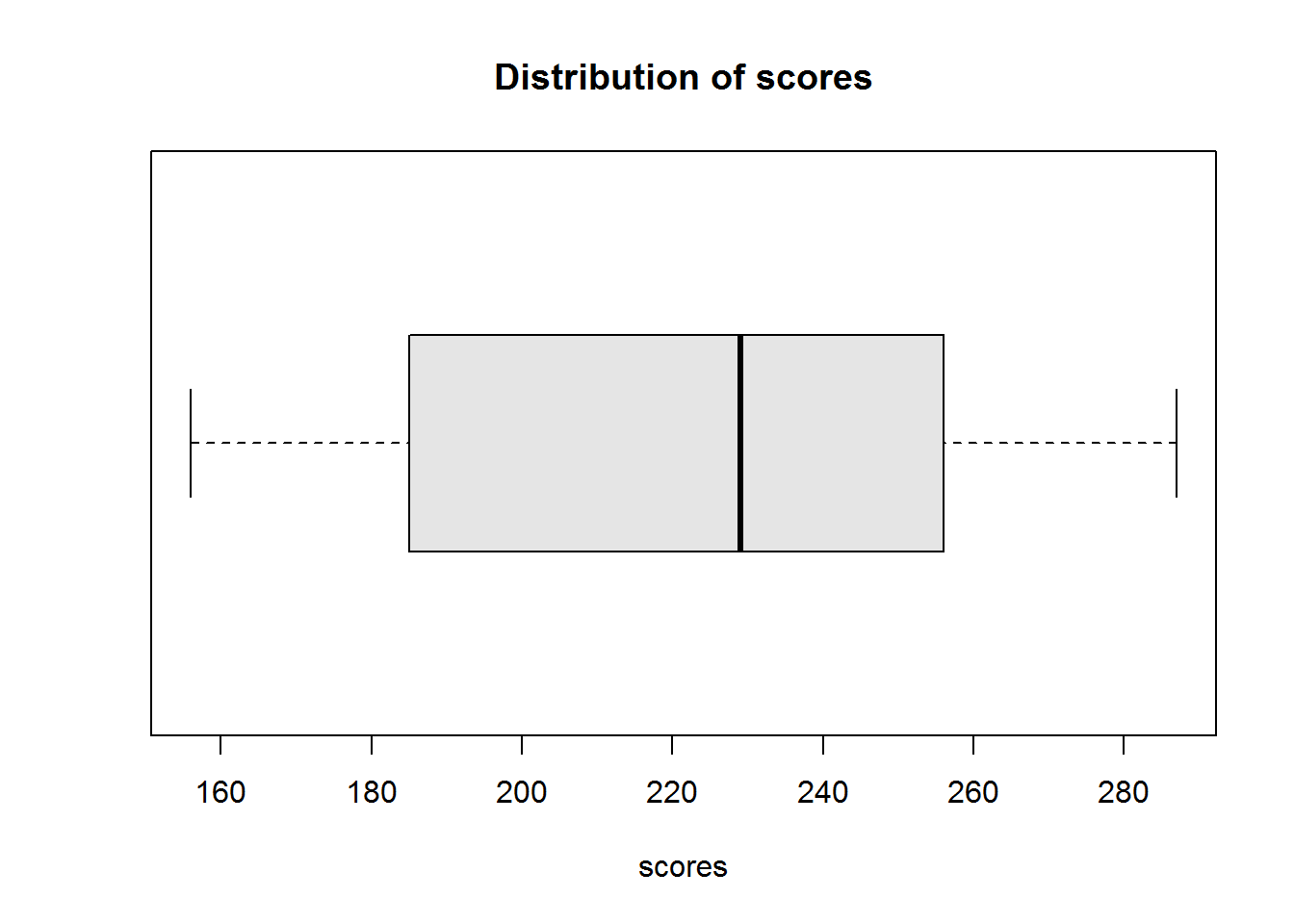

From our scores on statistical programs, we can compute Q3 as 256 and Q1 as 185 therefore IQR is 71. So we can report 50% of our data lie between 185 and 256. We can also show this using a box plot.

boxplot(scores, horizontal = TRUE, col = "grey90")

title("Distribution of scores", xlab = "scores")

Figure 2.3: Scores distribution

From this box plot, we can also establish there are no outliers.

Note, IQR is a range and not a scalar (single value), hence it’s best to report both range and difference like “131 (156 - 256)” or simply the range alone “156, 256” but not “131”, it’s simply not communicative.

Like range, IQR is not the best measure of dispersion as it does not take into account other values in a distribution other than quartiles, minimum and maximum values. A more robust measure of dispersion is standard deviation.

2.2.3.3 Variance and Standard Deviation

One of the questions we sort to find out when we started this section on measures of dispersion is how far our data points are from the mean, to answer this question we use standard deviation which is derived from variance.

Quite simply, standard deviation is the average distance of values of a distribution from it’s mean. The greater this distance, the greater the standard deviation and it’s dispersion. The shorter this distance, the short is standard deviation and it’s dispersion.

In general, standard deviation try’s to establish an indicator of where each data point is from the mean. Hence we compute standard deviation (which we shall refer to as “sd”) as summation of difference of each data point from it’s mean divided by total number of values (basically mean of the distances from the mean).

But before we sum these differences, we need to take note that summing deviations from the mean would result to zero since values above the mean would cancel values below the mean. To overcome this, we need to turn negative deviations to positive values. Two way to turn these values to non-negative values is by getting absolutes or squares. Squaring is preferred as it has some mathematical properties which are easier to work with, hence, before summing deviations we need to square them first.

Once we take an average of the sum of squared differences, we would have computed a measure of dispersion called “variance”. Variance is therefore the average/mean sum of squared deviations.

Variance is a squared deviation of data points from the mean, but what we need are the deviations. To get these deviations we take square root of variance which becomes our standard deviation.

Using our score’s data set, let’s go step by step to compute variance and standard deviation before seeing how easy it is to get both statistics in R.

Step 1: Compute mean

We compute mean as 223.3636364.

Step 2: Get squares difference from the mean

Our squared difference are:

cat(paste(paste0("(", scores, "-", round(mean(scores), 2), ")^2"), "=", round((scores - mean(scores))^2, 2), collapse = "\n"))

## (174-223.36)^2 = 2436.77

## (286-223.36)^2 = 3923.31

## (287-223.36)^2 = 4049.59

## (236-223.36)^2 = 159.68

## (211-223.36)^2 = 152.86

## (156-223.36)^2 = 4537.86

## (232-223.36)^2 = 74.59

## (188-223.36)^2 = 1250.59

## (182-223.36)^2 = 1710.95

## (276-223.36)^2 = 2770.59

## (229-223.36)^2 = 31.77Step 3: Compute variance (average sum of squared deviations)

Sum of squared deviation is 2.109854510^{4} and average sum of squared deviations is 1918.0495868.

Step 4: Compute standard deviations

Standard deviation is simply square root of variance which is 43.795543.

Before we see how to compute variance and standard deviation in R, there is an important issue to note when computing variance, this is bias and unbiased variance.

Biased and unbiased variance

When dealing with sample data, our goal is to estimate true values of a population. So when dealing with sample statistics (estimates), we expect it to represent population parameters from where it came from.

When we say “expect”, we mean that on repeated experiments, on average the estimator equals true parameter. This is often referred to as expectation of an estimator.

When an expected estimator does not reflect it’s true population parameter then it is said to be biased. An expected estimator is said to be unbiased if it equals true population parameter.

Mathematically, it has been proven that expectation sample mean is an unbiased estimator of population mean. It’s also been proven that sample variance is a biased estimator of population variance. The latter occurs as sample variance tends to underestimate variance.

To correct a biased sample variance, a normalization factor is used. This factor increases mean squared deviations (MSE) by dividing squared deviations with “n - 1” instead of “n”. This technique is called “Bessel’s correction” and it is used when population mean is unknown.

In summary, when population mean is unknown, we compute sample variance as sum of squared deviations divided by number of values minus one. We can express this mathematically as:

\[S^2 = \frac{\sum\limits^{n}_{i=1}(x_i - \bar{x})^2}{n - 1}\]

Where:

\(S^2\) = Sample variance \(x_i\) = Sample observations (values) \(\bar{x}\) = sample mean \(n\) = Number of observations (values)

Population variance remains the same, that is:

\[\sigma^2 = \frac{\sum\limits^{N}_{i=1}(X_i - \mu)^2}{N}\]

Where:

\(\sigma^2\) = Population variance \(X_i\) = Population values \(\mu\) = Population mean \(N\) = Total population (sum of all population values)

Based on preceding discussion, assuming our scores are sample data and we do not know population parameters, then correct sample variance is 2109.85 to the nearest 2 decimal places (2dp)) and standard deviation is 45.93 (2dp).

Computing variance and standard deviation in R

To compute variance in R we use function “var”. Note, R uses (corrected) sample variance. To compute sample standard deviation we simply use function “sd”, which is the same as square rooting variance “sqrt(var)”.

# Variance

var(scores)

## [1] 2109.855

# Sd, either

sqrt(var(scores))

## [1] 45.93315

# Or simply

sd(scores)

## [1] 45.93315Interpreting Standard Deviations

Note, here we want to interpret standard deviation and not variance. Variance is not meaningful, we only use it to compute standard deviation.

The whole point of computing standard deviation is to measure a distributions dispersion from it’s average. A high standard deviation means distribution is spread out, a low standard deviation means values are centered around the mean.

For our scores data set, we found a standard deviation of about 46, this is certainly high, hence we can say values in this distribution are spread out.

When reporting, we report both mean and standard deviation like this, “Scores had a mean of about 223 with a standard deviation of 46”.

2.2.3.4 Displaying Dispersion

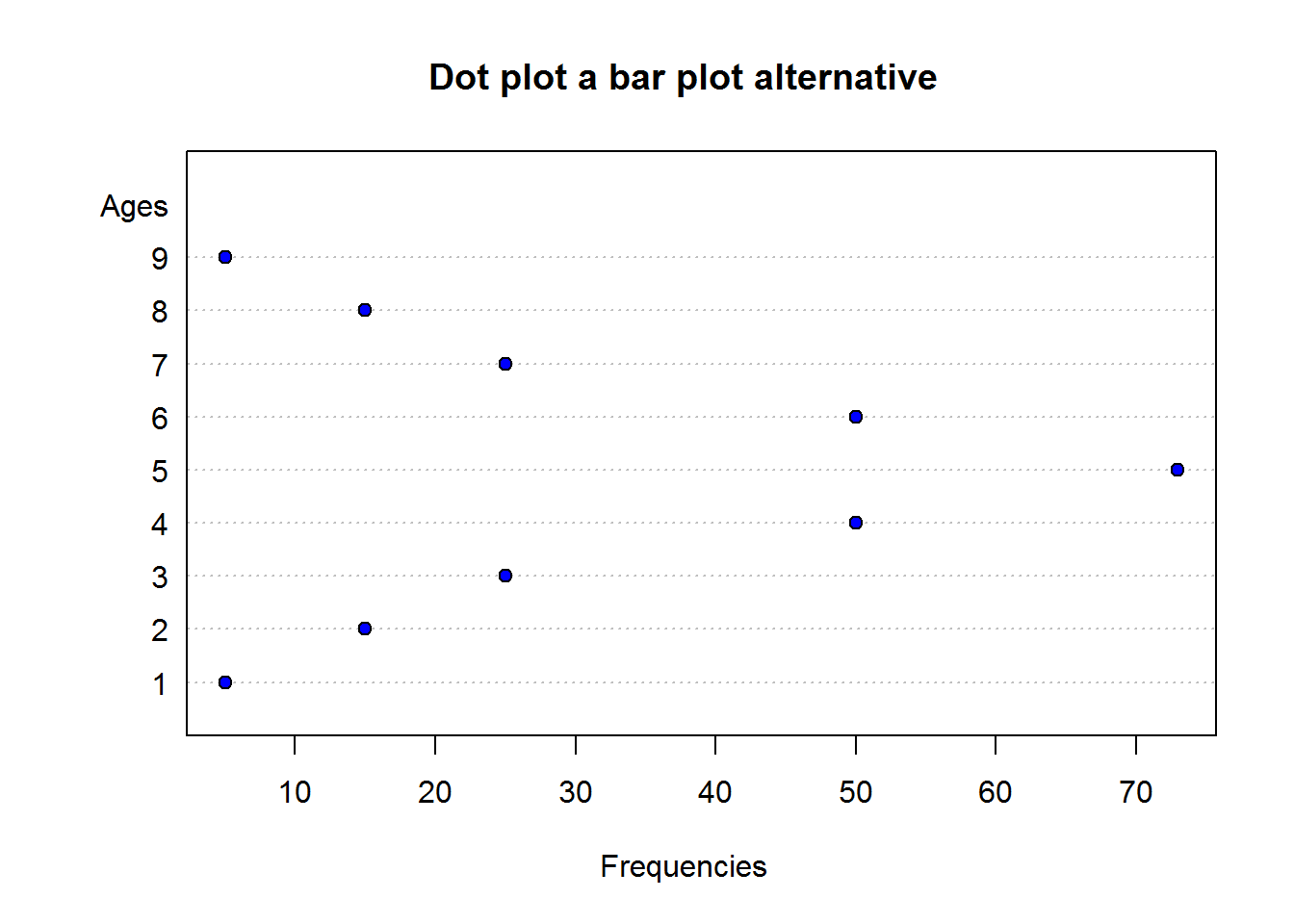

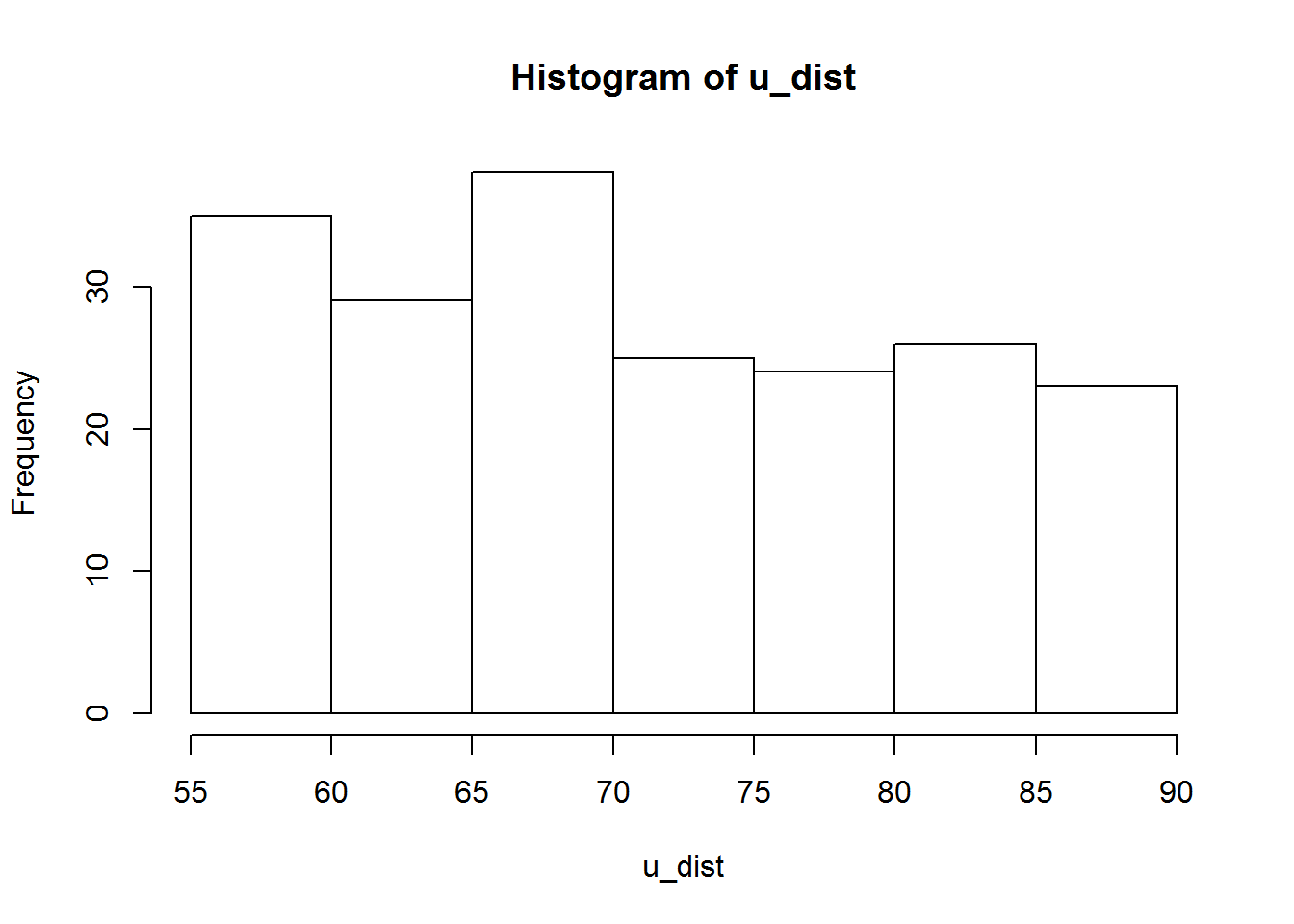

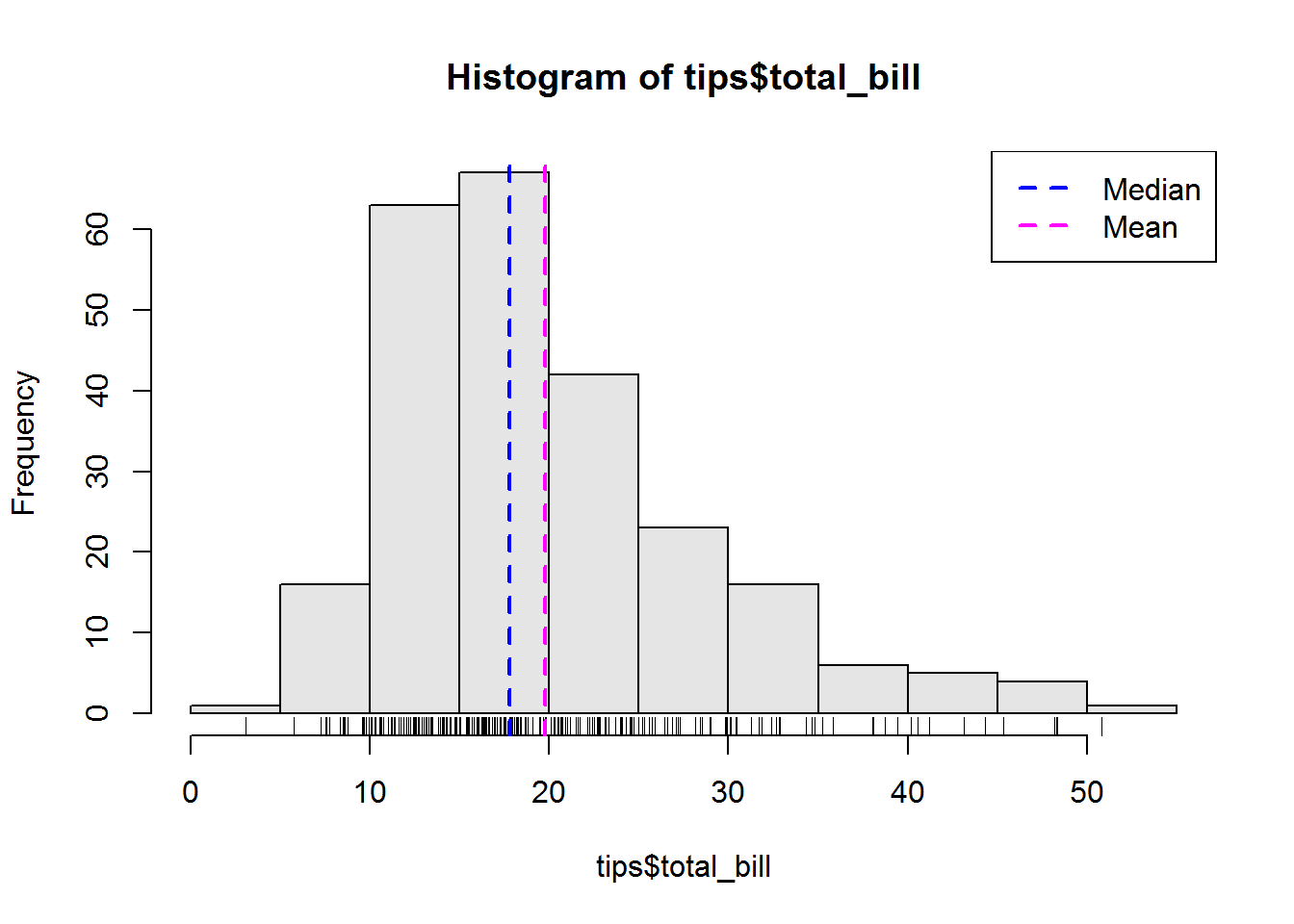

There are a number of graphical displays that show a distribution’s dispersion, some of them include dot plot, stem-and-leave plot and histogram.

A dot plot is one of the simplest plots to develop, it displays values in a distribution. It involves plotting values on a scale, these values are stacked-up to look like bar charts (vertical rectangle boxes). This plot is used when there few data points(roughly around 30 values). For data sets with more values, stem-and-leave or histogram is appropriate. One advantage a dot plot has over most graphs is it’s preservation of numerical information (it is easy to see each value/data point). There are different variants of dot plots, original dot plot stems from 1880’s, called “Wilkinson’s dot plot” and a newer dot plot suggested by Cleveland ( a variation of a bar plot).

A stem-and-leave plot shows frequencies for which values occur in a distribution. They are sort of table where each value is split into a “stem” (the first digits) and a “leaf” (usually the last digits). They show ordered distribution which makes it easy to read and interpret. They are most appropriate for distributions with about 15 to 150 data points. Anything below 15 would not show distribution well and anything above 150 might be to clustered.

Histograms, these are perhaps the most widely used graphical displays for continuous distributions. A histogram is graph with rectangular boxes representing either frequencies or proportions. Rectangles represent “bins” which are range of values or intervals split from a distribution. The number of bins a histogram can have depend on a distribution, there is no ideal number as long as the bins show different features of the data. One of the advantages of histograms is it’s ability to show distributions with many values.

In this section we will use original dot plot to show distribution of our variables.

Dot Plot

To generate a dot plot, draw a number line or a scale from minimum to maximum value and then mark a dot on each number there is a value. If there are more than one value, then stack the dots on top of each other.

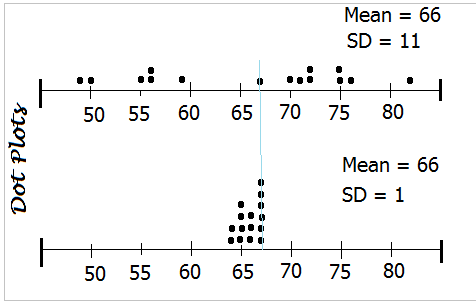

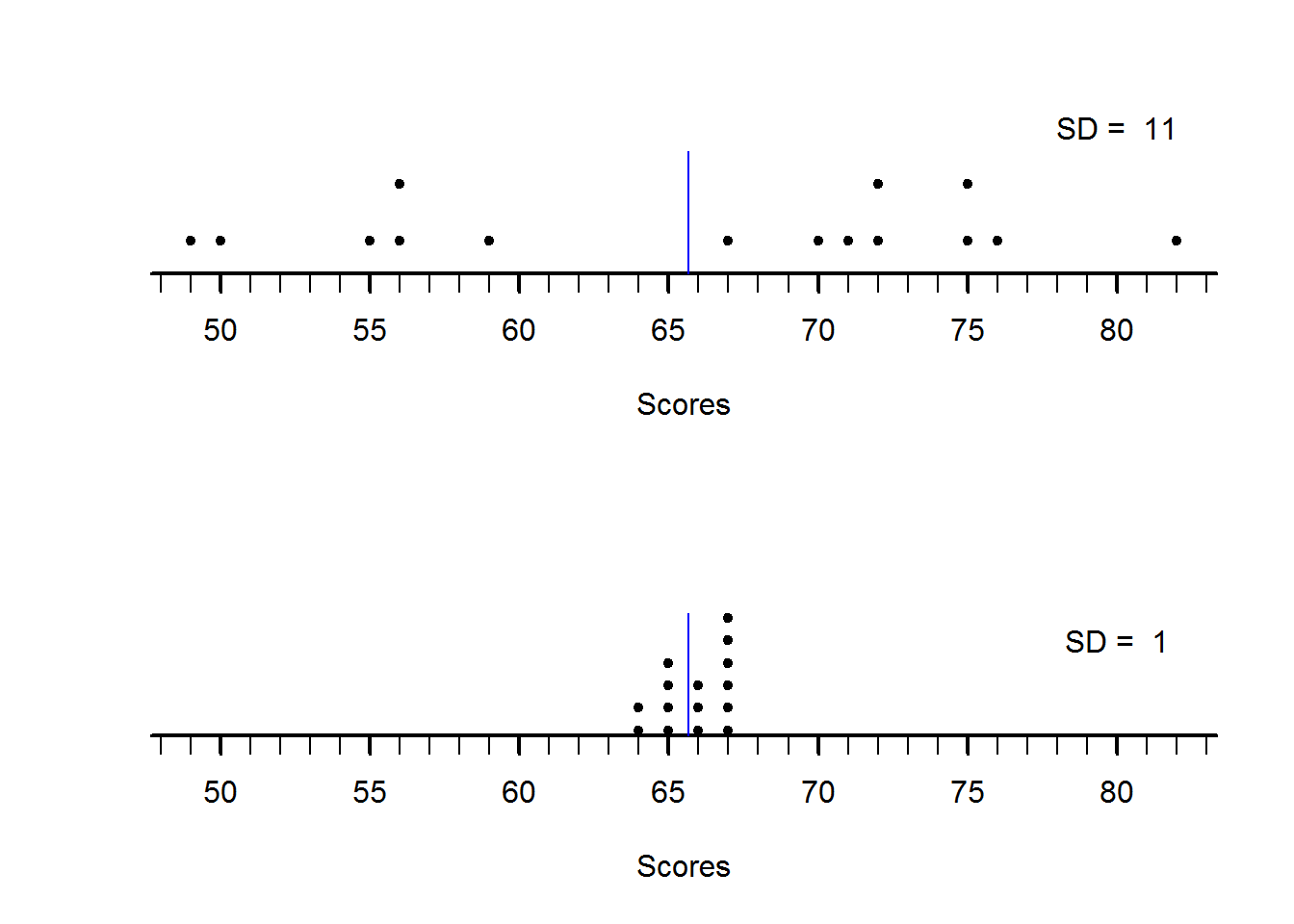

Let’s look at two distribution with the same mean but different standard deviations. We are interested in seeing how standard deviation informs us of a distribution’s spread.

## First distribution: 49, 50, 55, 56, 56, 59, 67, 70, 71, 72, 72, 75, 75, 76 and 82. Mean is about 66 and Standard deviation is about 11.

## Second distribution: 64, 64, 65, 65, 65, 65, 66, 66, 66, 67, 67, 67, 67, 67 and 67. Mean is about 66 and Standard Deviation is 1.If we draw these two distribution using the same scale, we should see something like this:

Dot plots

Unfortunately R does not have a function to generate this kind of plot, function “dot chart” produces a Cleveland’s dot plot which is basically a bar chart with dots instead of rectangular boxes.

But not to worry, we can easily generate our own plot given our understanding of plot function (discussed in chapter eight of “R Essentials”).

# Set canvas to 2 rows and 1 column

op <- par("mfrow")

par(mfrow = c(2, 1))

# Generate frequency indices

y1 <- c(rep(1, 4), 2, rep(1, 5), 2, 1, 2, rep(1, 2))

y2 <- c(1:2, 1:4, 1:3, 1:6)

# First plot

plot(scores4, y1, type = "n", axes = FALSE, ylim = c(0.5, 2.5), xlab = "Scores", ylab = "")

axis(side = 1, at = seq(45, 85, by = 1), labels = FALSE)

axis(side = 1, at = seq(45, 85, by = 5), labels = FALSE, lwd = 2)

mtext(seq(50, 80, by = 5), side = 1, line = 1, at = seq(50, 80, 5))

axis(side = 2, labels = FALSE, tick = FALSE, lwd = 0)

text(x = 80, y = 3, labels = paste("SD = ", round(sd(scores4))), xpd = TRUE)

abline(v = mean(scores4), col = 4)

points(x = scores4, y = y1, pch = 20)

# Second plot

plot(scores5, y2, type = "n", axes = FALSE, xlab = "Scores", ylab = "", xlim = range(scores4))

axis(side = 1, at = seq(45, 85, by = 1), labels = FALSE)

axis(side = 1, at = seq(45, 85, by = 5), labels = FALSE, lwd = 2)

mtext(seq(50, 80, by = 5), side = 1, line = 1, at = seq(50, 80, 5))

axis(side = 2, labels = FALSE, tick = FALSE, lwd = 0)

text(x = 80, y = 5, labels = paste("SD = ", round(sd(scores5))))

abline(v = mean(scores4), col = 4)

points(x = scores5, y = y2, pch = 20)

Figure 2.4: dotplots

par(mfrow = op)From our two plots, it is clear to see how they differ in terms of dispersion. First plot with sd of about 11 is more spread out than second plot with sd of about 1 despite them having a mean of about 66.

2.2.4 Shape of distributions

Shape of a distribution is an important concepts in statistics as it informs on observations balance around it’s center and presence of outliers. This balance around it’s mean (symmetry) and presence or absence of outliers is often used to determine statistical methods of inference. Based on this fact, this section aims to introduce a quantitative measure for shape of sets of points called “moments”, more specifically we will look at the third (skewness) and fourth (kurtosis) moments.

In statistics, moments are measures used to describe a distributions shape in terms of it balance from a central point (mean). Statistical moments can show a distribution that is symmetric or asymmetric. It can also inform us which side of the distribution has trailing observation as well as symmetric distributions with heavy tails.

Moments have incremental measures referred to as orders and they begin from Zero up to higher order moments. The first five statistical moments are most commonly used to describe a distribution’s shape. These moments are:

- Zeroth moment - Total distribution

- First moment - Mean

- Second moment - Variance

- Third moment - Skewness

- Fourth moment - Kurtosis

Statistical moments are based on concept of probability, for example the zeroth moment is a probability distribution function equal to one. That is, summation of all chances of observing a value in the distribution will total to 1. For example, for a discrete variable like a coin toss, there are only two outcomes, heads or tails, the chance of observing either a head or a tail for a fair coin is equal, that is 1/2 (or 0.5). Summation of these two chances equals 1. For a continuous variable such as having a student with a given height is not easily computed as that of a discrete variable. This is because continuous variables can take on any value within a range. When we reach chapter four on statistical inference and specifically probability, we will get to know “probability density function” which uses integrals to compute probability of continuous variables. At this point what we should grasp is that for both discrete and continuous variable summation of all possible chances equals 1.

With the understanding that statistical moments are based on probability, when we talk of mean we are referring to mean of a discrete variable given \(\sum_xP_{(x)}\) and mean for a continuous variable given by \(\int xf_{(x)}d_{x}\). We will not get into defining these two function as we will get to discuss them at length later, but is important to know what we mean when we say first moment.

An important issue to know as we discuss statistical moments is “expectations” as we shall often refer to them. This concept is also a probability concept which simply means a predicted “average”. To understand this concept, think of dice throws, if we threw a pair of dice many times, then in the long-run the chance (probability) of observing a pair of numbers like 2 and 3 would normalize to a certain value. That is, on average, given the many throws, we expect to see numbers 2 and 3 say 20% of the time. This average is what we call “expected value” and we will later note that this is based on “laws-of-large-numbers”. Mathematically, expectation is denoted by \({\mathbb E}\), so when we write \({\mathbb E}(x)\) (expectation of x), we simply mean average of variable \(x\).

Each of the moments is defined by a mathematical formula that is a geometric series 7 and more specifically an exponential function. Exponential function are basically a base number raised to a certain number like \(2^4\). Exponential functions tend to grow over time or in this case values, example, take base 2 and raise it to exponents 0 through to 5, you should get 1, 2, 4, 8, 16, 32. The basic idea of letting these values grow is to be able to give more weight to values that are far from the mean. By doing so, we are able to determine just how far they are and how much they weigh (weight of the tails). It is with this reasoning that we raise zeroth moment with 0, first moment (mean) with 1, second moment (variance) with 2, third moment with 3 and fourth moment with 4.

The basic formula for a moment is:

sth moment =

\[\frac{(x_1^s + x_2^s + x_3^s + ... +x_n^s)}{n}\]

Where:

s = Number of moment (like 1 for mean) n = Total number of observations

This formula can also be expressed simply as \({\mathbb E}(x)^s\).

In statistics, our values \(x\) are in reference to a particular point, hence they will be subtracted from this reference point.

Zeroth moment

Zeroth moment as mentioned earlier would sum-up to one. It is given by \({\mathbb E}((x - \bar{x})^0)\) (expectation of deviations raised to 0) or \(\sum(x - \bar{x})^0\).

It is good to note that 0 is considered point of equilibrium (perfect balance) for any distribution. This point of equilibrium is referred to as origin.

First moment

As mentioned, first moment is the mean and it is defined as a “raw moment”. Without going into too much detail, raw moments are in reference to “origin” zero.

So mean (first moment about origin) is given by:

\(\bar{x}_1^` = {\mathbb E}[(x - 0)^1]\) = \({\mathbb E}[(x)^1]\)

\[\therefore \bar{x} = {\mathbb E}(x)\]

The symbol \(`\) on top of \(\bar{x}\) means it a raw moment.

Second moment

At this point we should be able to see a pattern in these moments. Each moment is raised to an exponent the same as it’s number and so far we have been making reference to the origin (distances from 0).

Now, from second moment we will start making reference to mean rather than it’s origin. That is, distance of each observation from it’s mean. We are doing this because the rest of the other moments relate only to spread and shape rather than it’s location (where it is from origin). It is for this reason (getting distances from the mean) that second, third, fourth and higher moments are defined as central moments.

Therefore we can express second moment as \({\mathbb E}[(x-\mu)^2]\) for population variance and \({\mathbb E}[(x-\bar{x})^2]\) for sample variance. Alternatively, these can be expressed as:

Population Variance:

\[\sigma^2 = \frac{\sum(x - \mu)^2}{n}\]

Unbiased sample variance:

\[s^2 = \frac{\sum(x - \bar{x})^2}{n-1}\]

Third moment

Third moment is Skewness, it measures a distribution’s symmetry. This moment will tell us if there are trailing values at either extremes of a distributions or not. We will discuss this a little more below, but here let’s look at it’s formula as a way to complete our discussion on statistical moments.

Following our second moment, we know this moment should be raised to three and it should be referenced to our average (\(\mu\) or \(\bar{x}\)). Therefore third moment is given by \(m_{3} = {\mathbb E}[(x-\mu)^3]\) or simply

\[m_{3} = \frac{\sum(x-\mu)^3}{n}\]

Where:

\(m_3\) = Third moment \(x\) = An observation \(\mu\) = Mean \(n\) = Number of observations

Notice \(m_3\) is the average cubed distance from the mean, for variance it was average squared distance from the mean. By taking cubes, we ensure direction of these distances are maintained, that is observation below the mean would be negative and those above the mean would be positive. For a perfectly symmetric distribution, values below the mean and those above the mean would cancel each other thereby resulting to zero. However, if values below or above are more than the other, then we would have a asymmetric (skewed) distribution.

When making comparison between different distributions with different size of unit of measure, this moment is bound to give unreliable measure. To understand this, take an example of the following two test scores:

## First test score: 74, 79, 41, 58, 97, 93, 40, 61, 46, 49, 59, 54, 94, 82 and 70

## Second test score: 37, 39.5, 20.5, 29, 48.5, 46.5, 20, 30.5, 23, 24.5, 29.5, 27, 47, 41 and 35The first test score is out of 100, and the other is out of fifty, but test score two is actually half of test score 1 so we should expect \(m_3\) to be the same for both distributions. But this is not so as:

## m3 for first test score is 1474.977

## m3 for second test score is 184.3721Difference between these two \(m_3\) comes from unequal size of unit of measure (100 and 50). To correct this we use a statistical concept known as “standardization”. Standardization basically means creating unit-less measures which are used to compare observations of different units or unit sizes.

In this case we will use standard deviation (average distance from mean) to standardize deviations. That is, we will take each deviation as a fraction of standard deviation. We will then take the cube route of these standardized deviation before summing the up. By doing so we would have “standardized moments”.

Mathematically we can express this standardized moments as:

\[m_3 = \frac{{\mathbb E}[(x-\mu)^3]}{\sigma^3}\]

Using this new standardized measure, we should get \(m_3\) for both scores as 0.2032448.

Fourth moment

Like \(m_3\), \(m_4\) or fourth moment is a standardized moment, meaning deviations are fractions of distributions standard deviation. Thus it is express as:

\[m_4 = \frac{{\mathbb E}[(x-\mu)^4]}{\sigma^4}\]

For standardized moments, they are expressed in terms of standard deviations from mean. By taking fourth power means we are eliminating negative deviation, but at the same time giving more weight to extreme values than those close to the mean. In particular, values that are less than one standard deviation away will have lower \(m_4\) but values than are 1 standard deviation away will have higher \(m_4\). For example, a value that is 0.5 standard deviation away will have \(m_4\) of 0.0625 while a value that is 1.5 standard deviation away will have \(m_4\) of 5.0625.

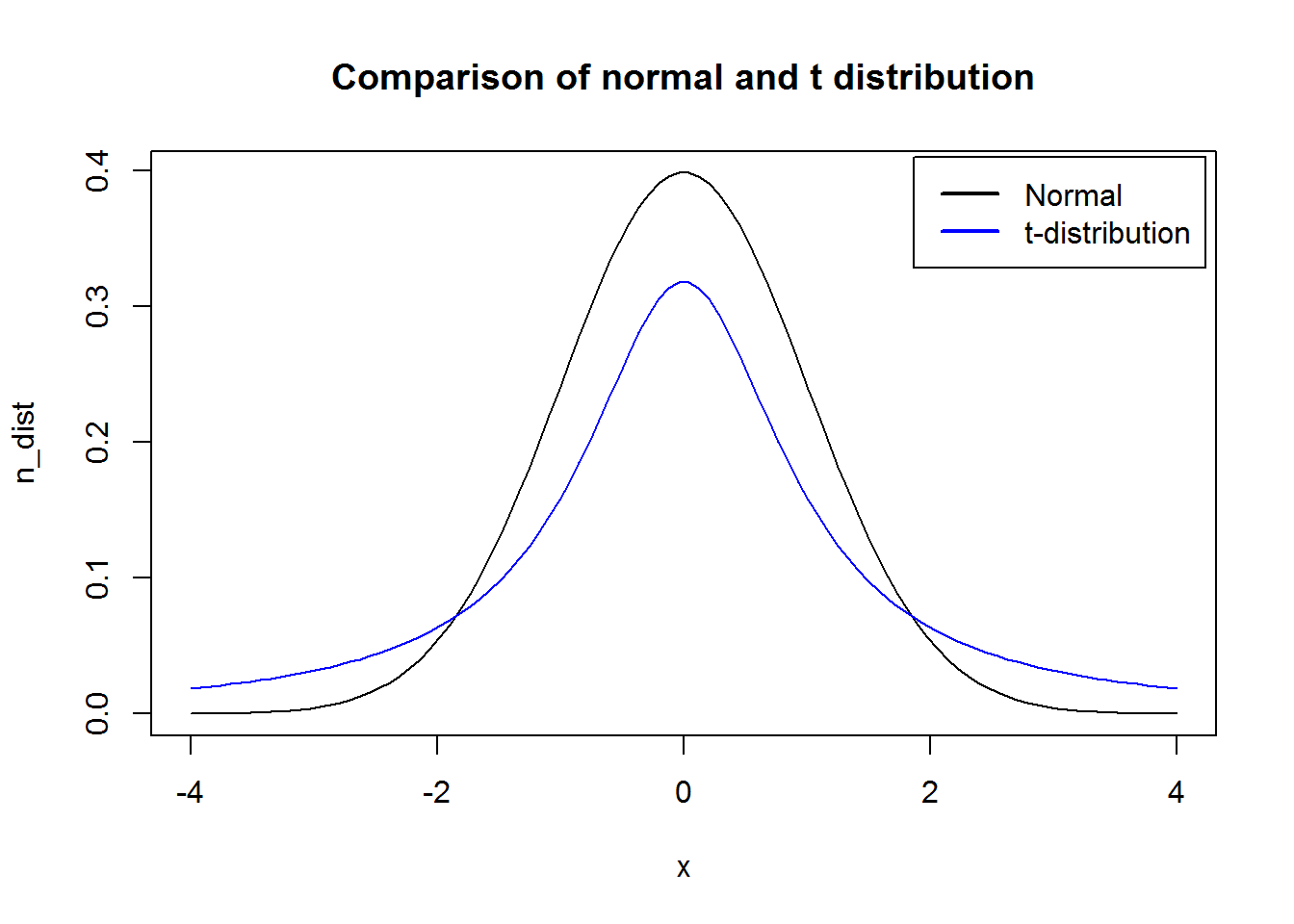

2.2.4.1 Skewness

Skewness in very basic terms is tendency of some values in a distribution to be located at the extreme points (minimum/maximum) of it’s distribution. When a distribution has most of it’s values centered around it’s average but has trailing values either to the left or right, then it is said to be skewed. Trailing values are often referred to as tails (from a visual point of view).

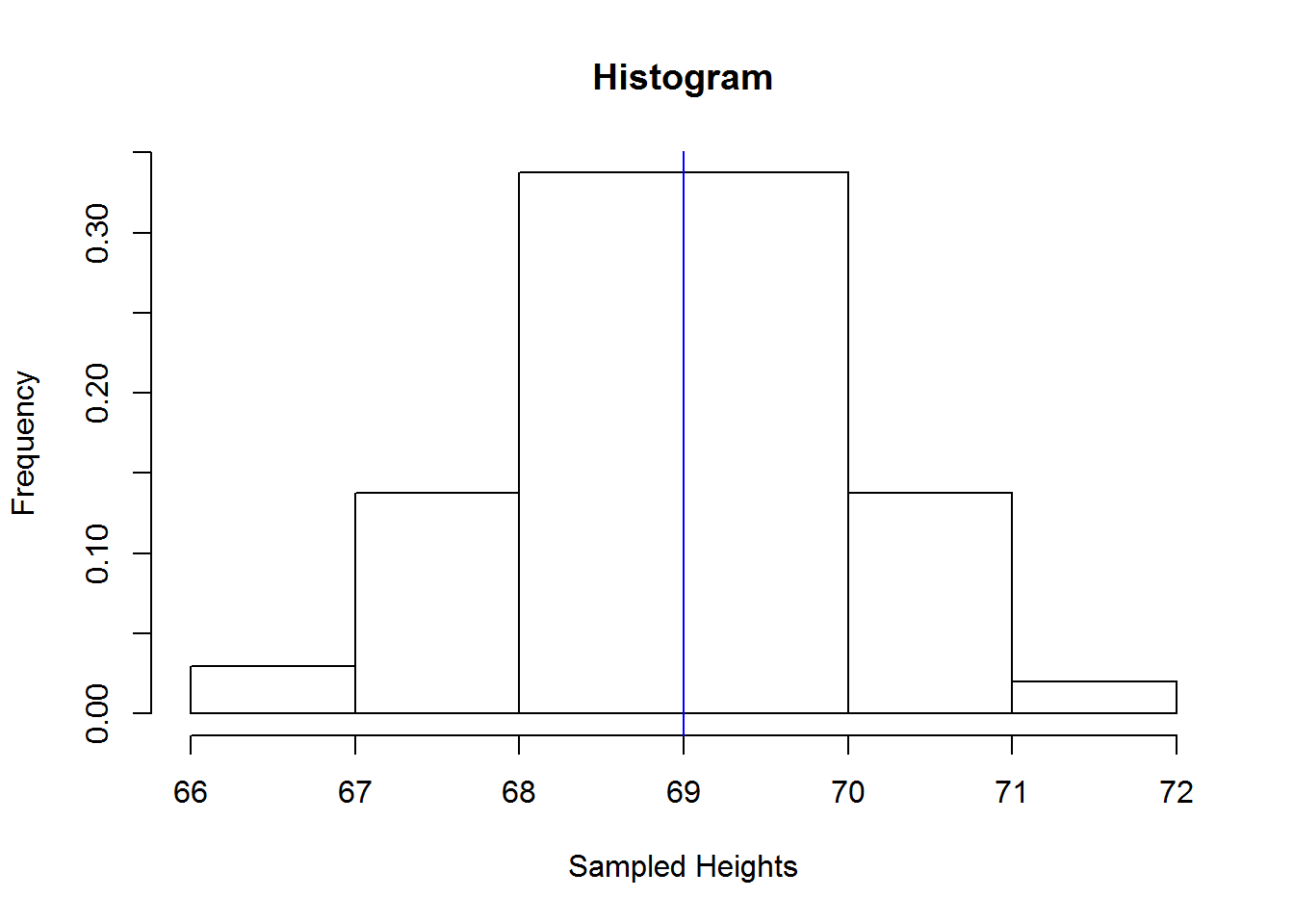

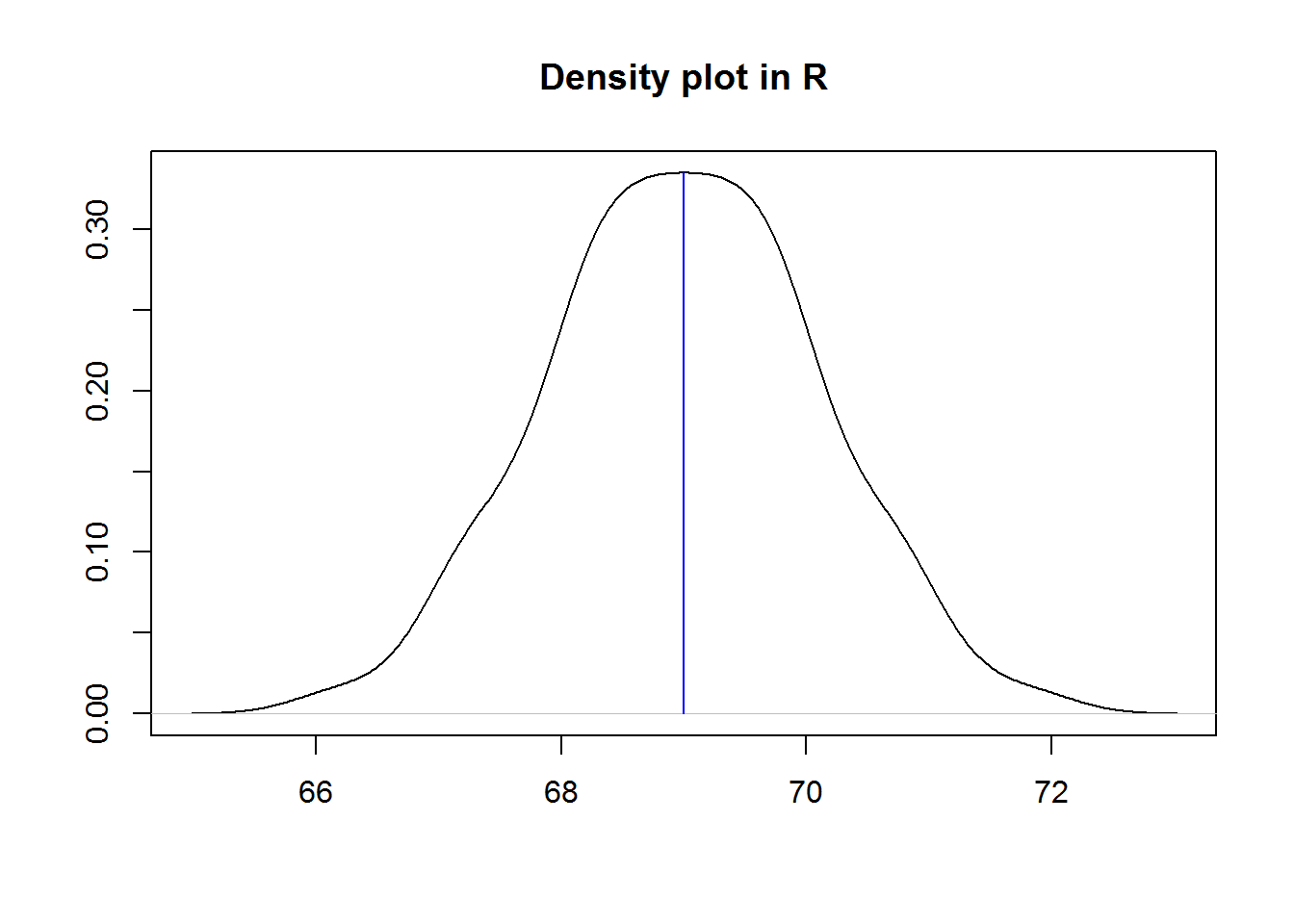

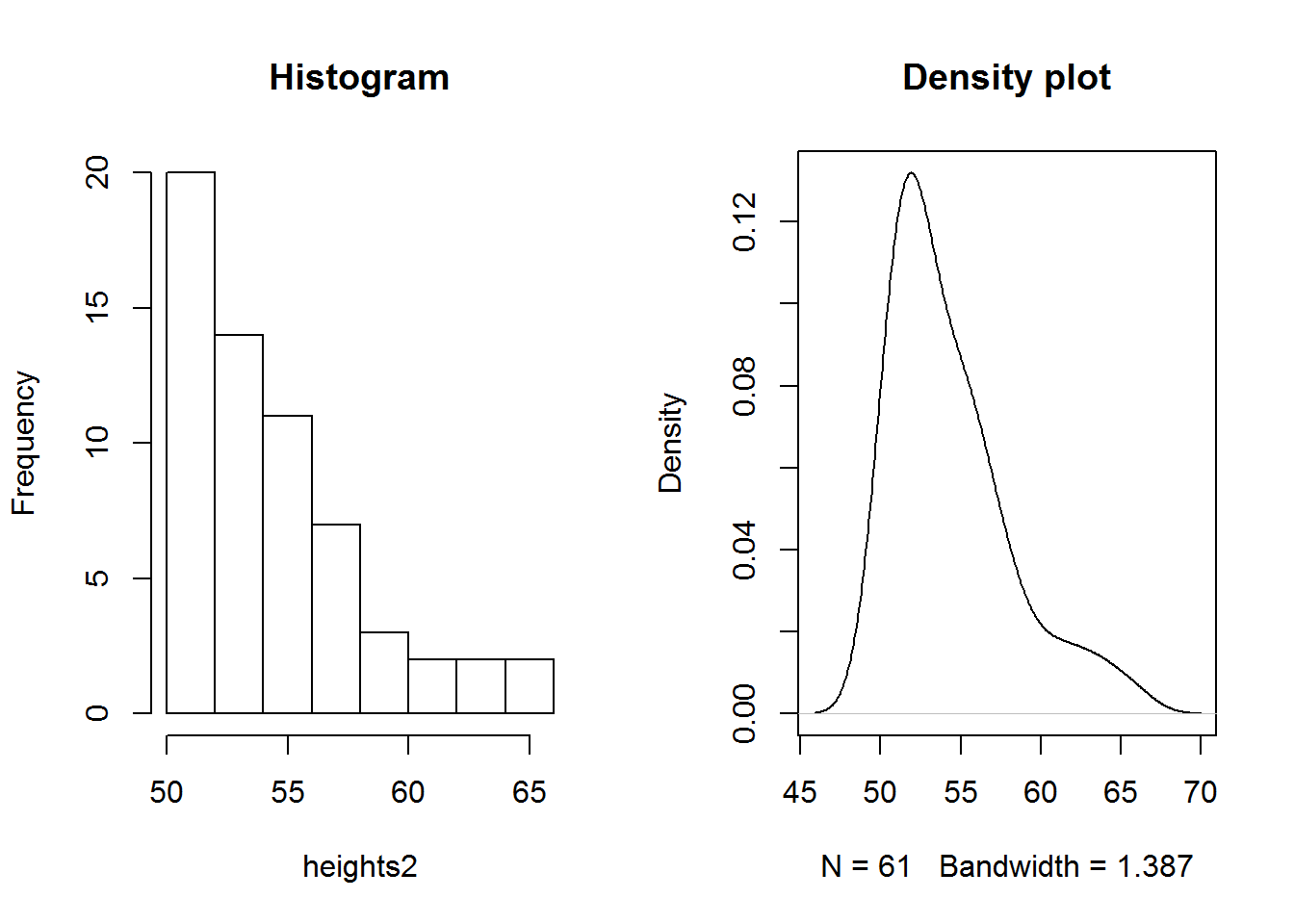

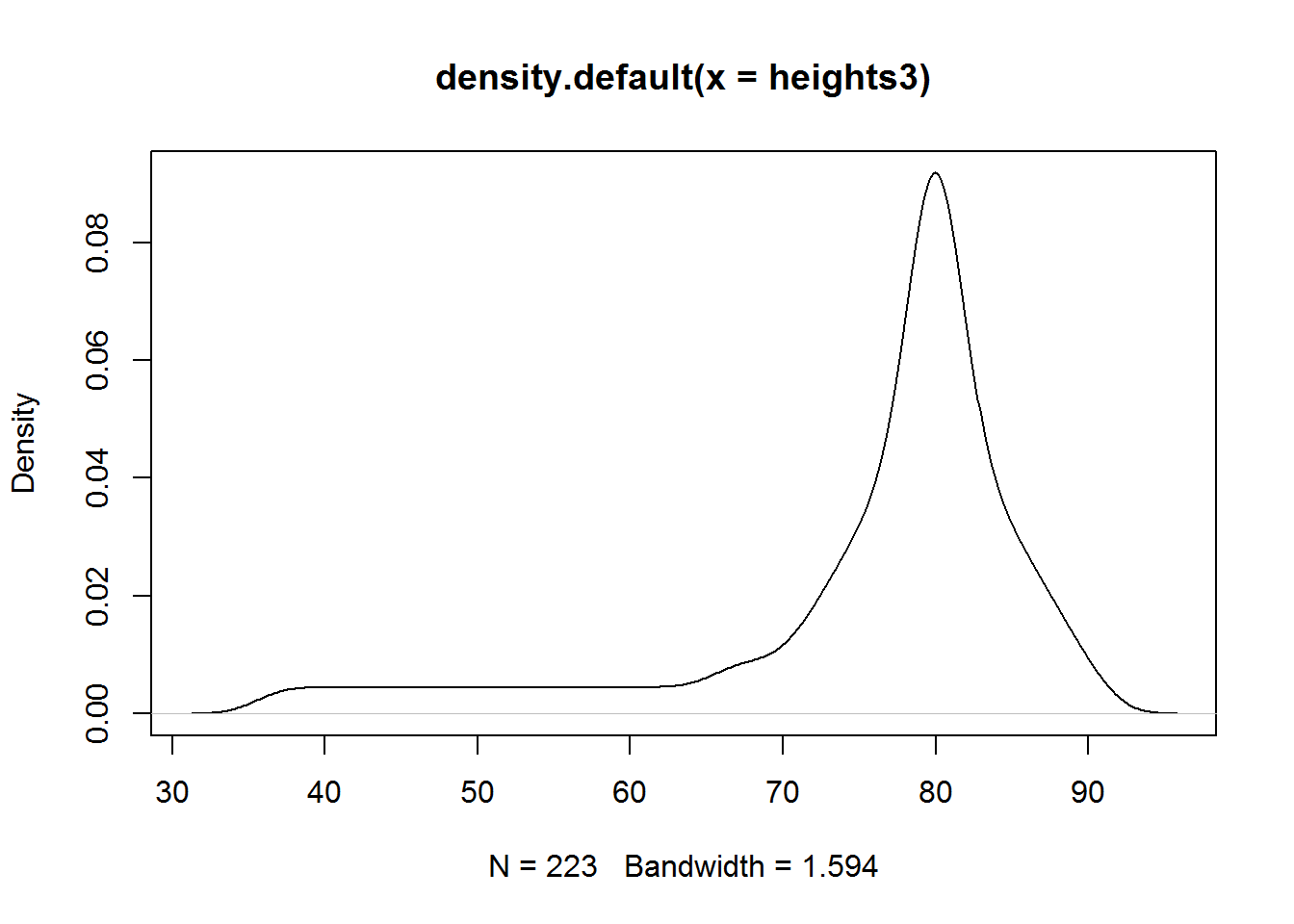

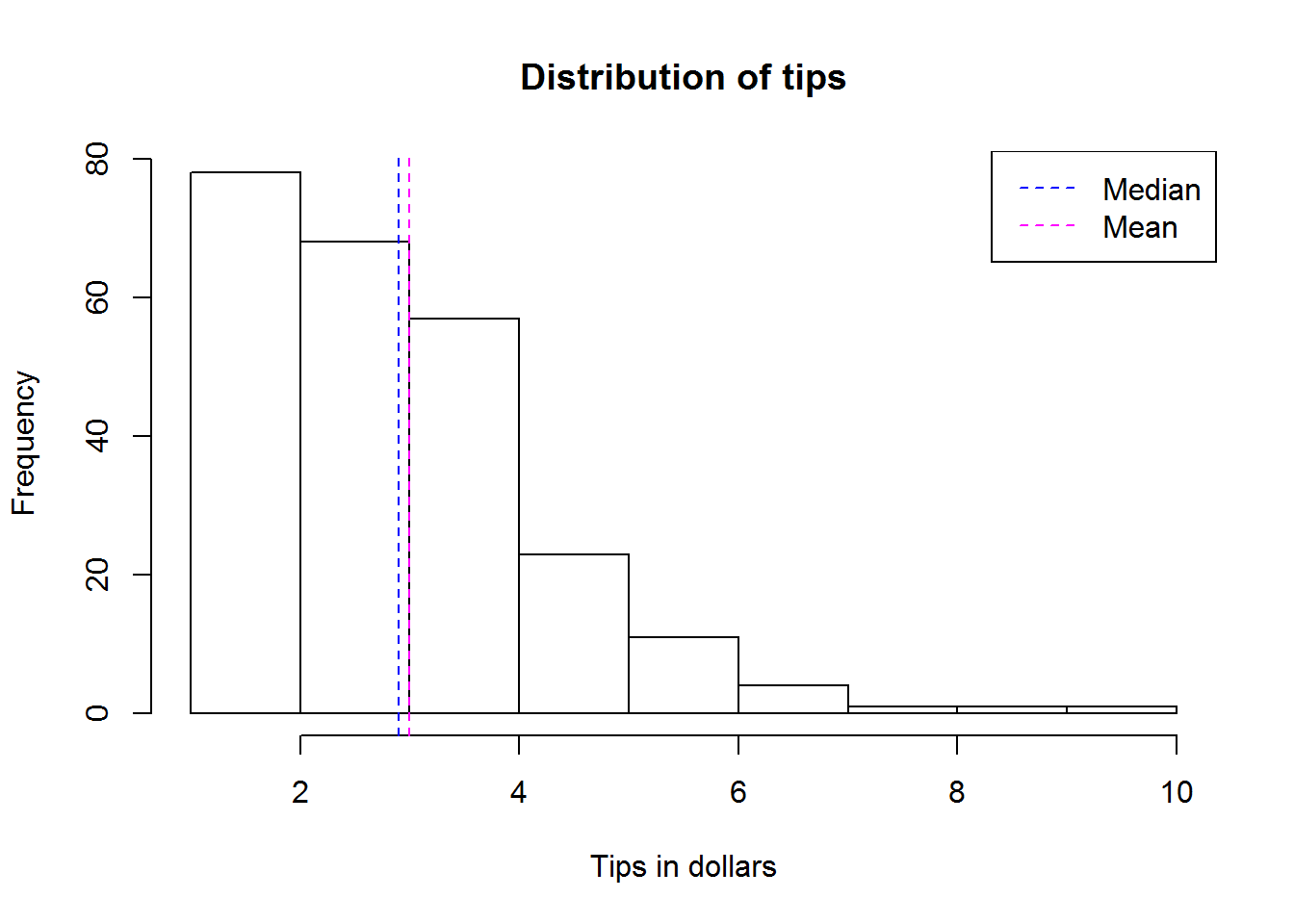

Skewness also means there is lack of symmetry (they are asymmetrical). Symmetry means there is a perfect balance to the left and to the right of a distribution’s average: two half’s of the distribution are a mirror image of each other. Symmetric distribution have their measures of center (mean, median and mode) equal or almost equal. Graphically, they are often referred to as “bell-shaped” curves.